Been working on testing the SPDIF DAC box I got from China. I made an FPGA-based tester that exercises it:

http://www.mediafire.com/download/ktx2vw3x38o9f4a/spdif_tester_2014-09-13.zip

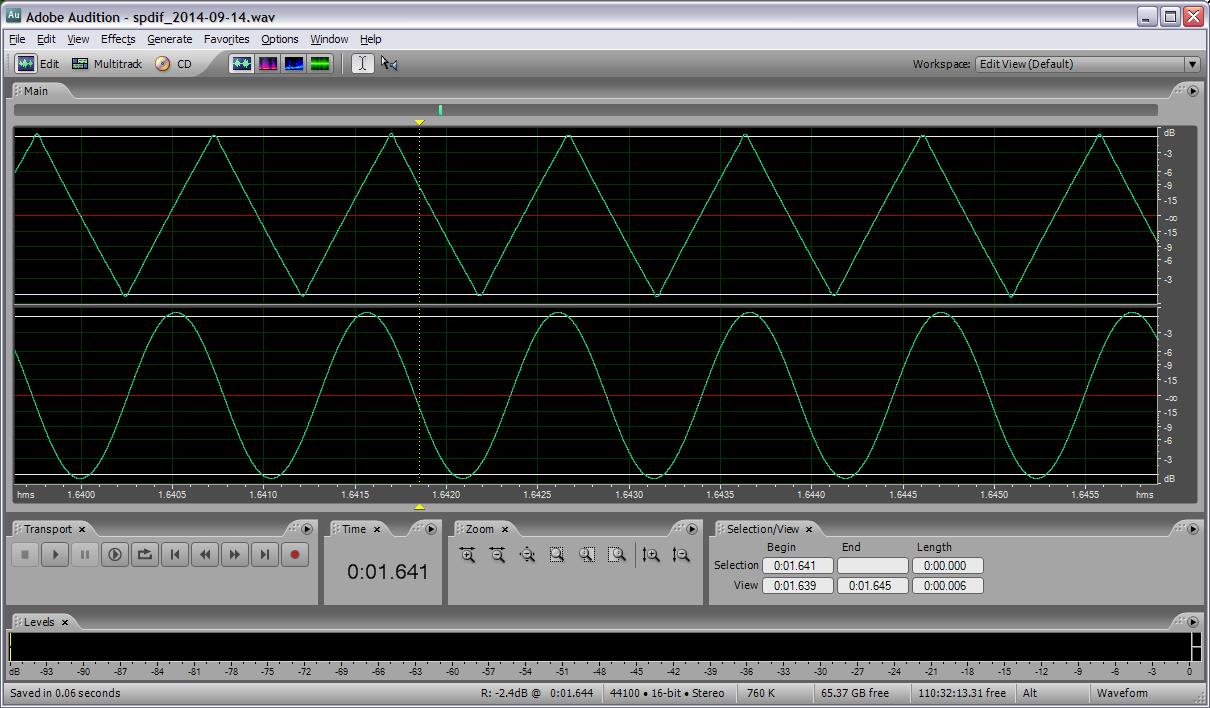

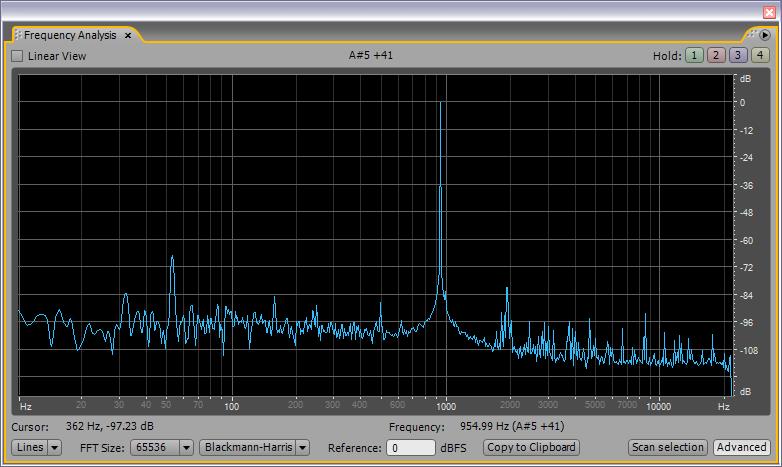

It works. The tester sends a sine wave and a triangle wave to the left and right channels, both around 1 kHz but at slightly different frequencies. The analog looks fine on my scope, but I don't have the equipment to do much in the way of distortion or noise floor analysis.
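For anyone wanting to generate similar test tones, here is a minimal sketch of a phase-accumulator (NCO) tone generator, the usual way to do this in an FPGA. It is not the code from the zip. The 48 kHz sample rate, the 32-bit accumulator, the 1000/1003 Hz frequencies, and the sine-left/triangle-right assignment are all my assumptions. Slightly offset frequencies make the two channels drift in phase relative to each other, which helps expose crosstalk or channel mix-ups on a scope.

```python
import math

FS = 48_000     # assumed sample rate in Hz (not stated in the post)
ACC_BITS = 32   # hypothetical phase-accumulator width

def tuning_word(freq_hz, fs=FS, acc_bits=ACC_BITS):
    """Phase increment per sample for a phase-accumulator (NCO) oscillator."""
    return round(freq_hz * (1 << acc_bits) / fs)

def sine_sample(phase, acc_bits=ACC_BITS, amp=32767):
    """16-bit signed sine sample derived from the accumulator phase."""
    return round(amp * math.sin(2 * math.pi * phase / (1 << acc_bits)))

def triangle_sample(phase, acc_bits=ACC_BITS, amp=32767):
    """16-bit signed triangle: -amp at phase 0, +amp at half a cycle, back to -amp."""
    x = phase / (1 << acc_bits)                  # 0.0 <= x < 1.0
    tri = 4 * x - 1 if x < 0.5 else 3 - 4 * x    # ranges over -1..+1
    return round(amp * tri)

def generate(n, f_left=1000.0, f_right=1003.0):
    """Return n (left, right) pairs: sine on the left, triangle on the right."""
    mask = (1 << ACC_BITS) - 1
    inc_l, inc_r = tuning_word(f_left), tuning_word(f_right)
    ph_l = ph_r = 0
    out = []
    for _ in range(n):
        out.append((sine_sample(ph_l), triangle_sample(ph_r)))
        ph_l = (ph_l + inc_l) & mask   # accumulator wraps modulo 2**ACC_BITS
        ph_r = (ph_r + inc_r) & mask
    return out
```

In hardware the sine would normally come from a lookup table indexed by the top accumulator bits rather than `math.sin`, but the phase arithmetic is the same.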

One mystery I can't figure out is why this box has less digital headroom than I would expect. If I send it ~0 dB there is severe clipping, more so on the negative swing. I have to cut the digital signal back to about -12 dB (a signed right shift by two bits) before the clipping goes away. That gives a fairly healthy 1 Vp-p analog output, but with 16-bit samples the resolution is then limited to 14 bits. I suspect the box will accept more than 16 bits, since the interface and DAC support it, but I haven't messed with that yet. Either way, I don't want to design my stuff around the limitations of this box.
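The headroom arithmetic is easy to check. Each bit of right shift halves the amplitude (about -6.02 dB), so two bits is about -12.04 dB and costs two bits of resolution. The 24-bit line assumes the box really does accept 24-bit words, which I haven't verified.

```python
import math

def shift_attenuation_db(shift):
    """Attenuation of an arithmetic right shift by `shift` bits, in dB."""
    return 20 * math.log10(2.0 ** -shift)

def effective_bits(word_bits, shift):
    """Resolution left after a right shift (the top bits just copy the sign)."""
    return word_bits - shift

def attenuate(sample, shift):
    """Signed right shift; Python's >> on ints is arithmetic (rounds toward -inf)."""
    return sample >> shift

print(round(shift_attenuation_db(2), 2))          # -12.04
print(effective_bits(16, 2))                      # 14
print(effective_bits(24, 2))                      # 22, if 24-bit input works
print(attenuate(-32768, 2), attenuate(32767, 2))  # -8192 8191
```

Sending 24-bit samples would keep the same 12 dB of backoff while still leaving 22 bits, well beyond what a 16-bit source needs.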

The electrical SPDIF interface ground needs a solid connection to the FPGA ground; otherwise there is a huge amount of digital noise (~1 V) on the analog side. I've seen single-ended serial ports really mess with audio. IMO they should be balanced current loops or optical for musical use, particularly if the serial line carries frequencies that reach down into the audio band.

The SPDIF interface is, as these things typically go, overly complex. The framing and encoding are OK I guess, but they implemented superframes (192-frame blocks) for the data subchannels, which idiotically carry completely different data sets for the pro and consumer versions of the interface. It's hard to tell exactly how, and for what reasons, the vaguely defined and sometimes conflicting data will be used by the end equipment. Here's an excerpt from an SPDIF implementation guide, the kind of document that is normally meant to clarify things:
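To show what "framing and encoding" means here, below is a minimal sketch of one subframe payload plus biphase-mark coding (BMC). It is illustrative, not my FPGA code. It covers time slots 4..31 only: a 24-bit audio field sent LSB first (shorter samples left-justified so the MSB lands in slot 27), then the validity (V), user (U), channel-status (C), and even-parity (P) bits. The 4-slot preambles are left out because they deliberately violate BMC so the receiver can find frame boundaries. The C bit from each of the 192 frames of a block is what builds up the channel-status "superframe". Function names are hypothetical.

```python
def subframe_bits(sample, sample_bits=16, validity=0, user=0, channel_status=0):
    """Time slots 4..31 of one subframe in transmission order (28 bits).

    Slots 4..27 are a 24-bit audio field, LSB first; a shorter sample is
    left-justified so its MSB lands in slot 27. Then V, U, C and parity P.
    """
    word = (sample & ((1 << sample_bits) - 1)) << (24 - sample_bits)
    bits = [(word >> i) & 1 for i in range(24)]
    bits += [validity & 1, user & 1, channel_status & 1]  # V=0 means "valid"
    bits.append(sum(bits) & 1)  # P makes slots 4..31 hold an even number of ones
    return bits

def bmc_encode(bits, level=0):
    """Biphase-mark code: two half-cells per bit. Returns (half_cells, final_level)."""
    halves = []
    for b in bits:
        level ^= 1           # every bit cell starts with a transition
        halves.append(level)
        if b:
            level ^= 1       # a '1' adds a second transition mid-cell
        halves.append(level)
    return halves, level

bits = subframe_bits(-1)         # 16-bit sample 0xFFFF fills slots 12..27
cells, last = bmc_encode(bits)   # 56 half-cells on the wire
```

Because every bit cell starts with a transition, the line is DC-free and self-clocking, and it doesn't matter which way round the two signal wires are connected.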

"A scheme for providing copy protection is also currently being developed. It includes knowing the category code and then utilizing the Copy and L bits to determine if a copy should be allowed. Digital processing of data should pass through the copy and L bits as defined by their particular category code. If mixing inputs, the highest level of protection of any one of the sources should be passed through. If the copy bit indicates no copy protection (copy = 1), then multiple copies can be made. If recording audio data to tape or disk, and any source has copy protection asserted, then the L bit must be used to determine whether the data can be recorded.

"The L bit determines whether the source is an original (or prerecorded) work, or is a copy of an original work (first generation or higher). The actual meaning of the L bit can only be determined by looking at the category code since certain category codes reverse the meaning.

"If the category code is CD (1000000) and the copy bit alternates at a 4 to 10Hz rate, the CD is a copy of an original work that has copy protection asserted and no recording is permitted."
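For reference, here is a rough sketch of pulling the fields the guide mentions out of a consumer-format channel-status block. It assumes the common layout: bit 0 = pro/consumer (0 = consumer), bit 2 = copy (1 = no copy protection, matching the quote), bits 8..14 = category code, bit 15 = L. It also assumes the 192 collected C bits are stored as bytes with bit 0 as the LSB of byte 0. The function names are hypothetical, and detecting the 4 to 10 Hz copy-bit alternation is left out.

```python
def cs_bit(block, n):
    """Channel-status bit n from a block of bytes, bit 0 = LSB of byte 0 (assumed)."""
    return (block[n // 8] >> (n % 8)) & 1

def decode_consumer_cs(block):
    """Extract copy bit, category code and L bit from a consumer-format block."""
    if cs_bit(block, 0):
        raise ValueError("professional format: these bits mean something else")
    return {
        "copy_permitted": cs_bit(block, 2) == 1,  # copy = 1 -> no copy protection
        # category code bits 8..14, written in transmission order like the guide
        "category": "".join(str(cs_bit(block, n)) for n in range(8, 15)),
        "l_bit": cs_bit(block, 15),  # meaning depends on the category code
    }

# Hypothetical block: consumer, copy permitted, CD category code, L = 0
block = bytes([0b00000100, 0b00000001]) + bytes(22)   # 24 bytes = 192 bits
print(decode_consumer_cs(block))
# {'copy_permitted': True, 'category': '1000000', 'l_bit': 0}
```

Even this small piece shows the problem: the L bit can't be interpreted without a table of category codes, and the whole thing changes meaning if bit 0 says "pro".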

Much of the needless complexity is clearly due to copyright issues, which are always a can of worms for everyone involved. The above reads like the SPDIF protocol was designed by the Three Stooges.