Found a nasty flaw in the way I was parsing labels. Explicitly assigned labels work fine, but implicitly assigned ones can break. An address assigned to a literal can require one, two, or three opcode slots depending on its value, so each such literal can push all of the code that follows it forward by zero, one, or two opcode slots. But the address value of a label can itself depend on where the label sits, so it's a chicken / egg thing. For instance, say we want to place a label value on stack 0, but the label is implicitly assigned somewhere higher in address space. We can't do the assignment unless we know the address, and we can't know the address unless we do the assignment!

One can work this sort of problem out on paper, so a solution exists. But formally coding it is another thing, particularly with a fixed number of passes over the code. One could probably gather all the info and do some sorting and thresholding, but it may be easiest to do repeated passes over the code, updating the label table, and exiting when the table stops changing. This will be the first approach I try. If labels weren't so useful I'd just drop them altogether, but I'm finding that coding with them really speeds things up and removes a lot of pain and bookkeeping.
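The repeated-pass idea can be sketched in a few lines of Python. This is only a toy under stated assumptions, not the actual assembler: the program representation (`"op"`, `"lit"`, `"label"` tuples), the `literal_slots` size thresholds, and the `max_passes` cap are all made up for illustration. Unknown labels start at address 0, so in this toy the literal sizes can only grow from one pass to the next, which is why the table settles.

```python
def literal_slots(value):
    """Hypothetical size rule: bigger values need more opcode slots."""
    if value < 0x40:
        return 1
    if value < 0x4000:
        return 2
    return 3


def resolve_labels(program, max_passes=100):
    """Re-scan the program until the label table stops changing."""
    labels = {}
    for _ in range(max_passes):
        new_labels = {}
        addr = 0
        for item in program:
            kind = item[0]
            if kind == "label":
                # Implicit label: its value is the current address.
                new_labels[item[1]] = addr
            elif kind == "lit":
                operand = item[1]
                # A label operand uses last pass's value (0 if not yet known).
                if isinstance(operand, str):
                    value = labels.get(operand, 0)
                else:
                    value = operand
                addr += literal_slots(value)
            else:
                # Any other opcode takes exactly one slot in this toy.
                addr += 1
        if new_labels == labels:
            return labels
        labels = new_labels
    raise RuntimeError("label table did not settle")


# A literal that refers to a label defined 63 opcodes later.
program = [("lit", "end")] + [("op",)] * 63 + [("label", "end")]
print(resolve_labels(program))
```

Here the first pass guesses a one-slot literal and puts `end` at 64, which is too big for one slot; the second pass widens the literal to two slots and moves `end` to 65; the third pass sees no change and exits.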

===========

Am also polishing the command line interface; when it's done it should be easily extensible to do other things. Engineers get criticized for spending inordinate amounts of time automating and optimizing things (XKCD), time they may never make back through those things running more smoothly. But this fails to take into account all of the necessary experimentation and self-training going on, which enables future projects to be developed that much faster. If we could live forever, an engineer might be your go-to person for getting lots of stuff done quickly, because they will have worked long and hard on lots of problems and will have ready solutions to many thorny issues. As for premature optimization, well, it's often difficult to tell where you are in a project, so it can be difficult to tell if your efforts are indeed premature. Experts will tell you to make a quick prototype, then toss it and make another, etc., but often the project isn't a good fit for that advice. E.g. my Hive simulator code is about as rats-nesty as I can tolerate / follow, and I often need to add features to it, which isn't all that easy, but I'm not about to scrap it and start over.

===========

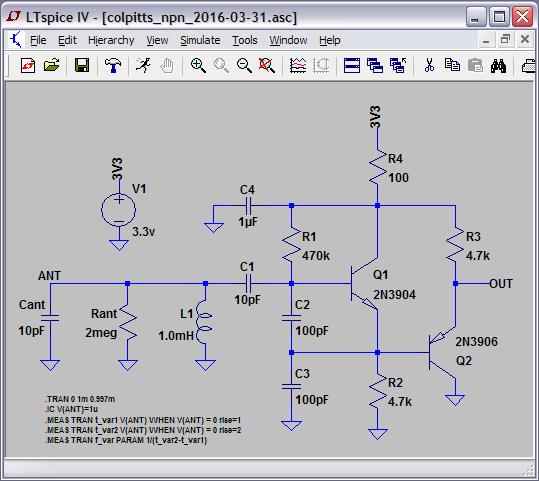

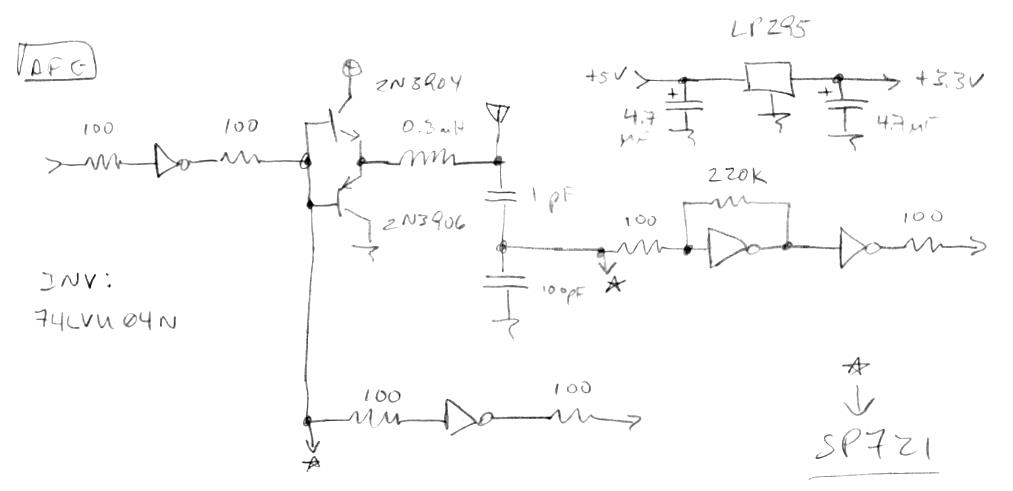

Once the above is behind me I will attempt an experiment to characterize the noise at the pitch antenna. In the DPLL, the operating point numbers are fed to a 4th order low-pass digital filter at double the edge rate (~5MHz). This filter lops off noise starting at ~1kHz and pretty much kills everything above 1/2 the sampling rate (48kHz). So I should be able to send the output of this filter more or less directly to the SPDIF TX logic and record it as audio in Audition. From there I will be able to see what is going on, both spectrally and in terms of overall amplitude. This will let me assess any countermeasures implemented to reduce the noise, whether in SW or in FPGA hardware (comb filter, variable LPF, etc.).
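As a rough software model of that signal path, here is a minimal Python sketch. It makes several assumptions that are not from the design itself: the 4th order filter is modeled as four identical cascaded one-pole sections (the real filter structure isn't described here), the class and function names are made up, and the decimation ratio of 104 is just 5MHz / 48kHz rounded down.

```python
import math


class CascadedLowPass:
    """Four one-pole low-pass sections in series (assumed structure)."""

    def __init__(self, cutoff_hz, sample_rate_hz, order=4):
        # Standard one-pole smoothing coefficient for the given corner.
        self.alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate_hz)
        self.state = [0.0] * order

    def step(self, x):
        # Each section smooths the output of the previous one.
        for i in range(len(self.state)):
            self.state[i] += self.alpha * (x - self.state[i])
            x = self.state[i]
        return x


def filter_and_decimate(samples, lpf, keep_every):
    """Filter at the full rate, then keep one output in every keep_every."""
    out = []
    for n, x in enumerate(samples):
        y = lpf.step(x)
        if n % keep_every == keep_every - 1:
            out.append(y)
    return out


# ~1kHz corner at a ~5MHz input rate, decimated to roughly 48kHz.
lpf = CascadedLowPass(cutoff_hz=1000.0, sample_rate_hz=5.0e6)
audio = filter_and_decimate([1.0] * 20000, lpf, keep_every=104)
```

Feeding this a constant input should settle to that constant, while a fast alternating input should come out close to zero, which is the behavior that makes plain decimation to the audio rate reasonable.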

The numbers from the DPLL are likely good enough to use as-is, but it never hurts to have more precision than you need, particularly when a fair amount of math will be performed on them.