dewster said: "I have a tendency to overthink this kind of stuff"

Christopher

Christopher

dewster said: "I have a tendency to overthink this kind of stuff"

Christopher

Christopher

Strings & Things

I haven't done enough assembly programming yet to really feel comfortable and efficient at it. But I always get this hazy sloging kind of feeling when I'm learning a new language. There are usually best ways to do various things, and it takes a fair amount of bumbling around to discover and become comfortable with them. I'm now able to do some limited HAL coding directly in the editor, but it's still easier to do serious coding in pencil in my notebook.

But I have done enough assembly programming to clearly see the utility of C type strings, where an arbitrary number of byte characters are terminated with the null (0) character. This construct is quite handy and compact for things like fixed messages (error, info, etc.). But it's a bit dangerous, and it's not suited for general purpose string manipulation. With the same overhead for short strings (<256) one could use the first byte of a string to indicate the length of the string, and this would be safer and really useful all-around. Or using the first two bytes of a string for length could accommodate super long strings (~ 1 chapter of a book). Or use one or more of the first byte codes to indicate more indexing info in the next byte or bytes, etc. Any type of simple string can be a useful intermediary target for input and output formatting functions, so e.g. you only have to write one kind of 32 bit HEX to ASCII string conversion routine, one string to UART routine, one string to LCD routine, etc. But you need an index to do any kind of string manipulation efficiently, which is why one of our first labs in C++ in college was to write a string class.

Yesterday I made the byte and word reads in Hive signed. ASCII is nice in that it doesn't utilize the MSb of the byte, so signed/unsigned is moot. And I made several HAL language constructs implicitly signed. So Hive / HAL are now basically signed, with some explicit unsigned stuff. Byte addressing is still kind of haunting me, can't seem shake it.

"4. Night club "Hive" (now)." - ILYA

Ha ha! Probably some great "threads" in there too!

============

Some progress: I've merged the CLI code with my pitch testing code, and I've written a subroutine that converts and writes a 32 bit hex value to a string. Looked at the encoder output on the LCD screen and that's working but could use more gain with faster rotation. Routed the pitch number through the hex string code and am now able to display that on the LCD. Pretty much what I saw via the UART, though this is obviously more self-contained and interesting (~15 steady bits over the hand range with ~12 bits of bobble). Pitch uses thread 0, CLI uses thread 7, LCD stuff is done with the thread 7 interrupt. So I'm at the point where can I route intermediate math numbers in memory to the LCD and snoop on things that way.

I seem to spend my days alternately writing one or two smallish subroutines and integrating them into the code - which often breaks things so I spend the following day tracking down bugs and polishing. I'm not complaining because it's not entirely unpleasant. Stack machines are good/bad in that when the code is slightly broken everything tends to break, and Hive has 8 threads sharing a single Von Neumann memory (data & opcodes) so it's extra achy-breaky.

But at this point I realize the mild feeling of dread I have regarding the process is avoidance of the paralyzing befuddled state that results when confronting even minor technical debt in a largish project. And I think this is the reason developers often practice the "evil sin" of premature optimization: there's just too much context switching going on when bugs come out of the woodwork, any one of which may require a multi-day deep-dive on the side to fix. Combine even the most air-tight subroutines on the most well-crafted logic and you'll get bugs, so why go looking for trouble?

This has been a really big (though extremely enjoyable and edifying) project for me (processor, simulator, assembly language, integer and float math packages, CLI - on top of all of the Theremin & DSP stuff) so I've had to be extra vigilant in order to get anything accomplished in the longer term. The only thing I wish I'd done differently is the core of the simulator - I probably should have written the C++ code to better mirror the actual hardware, with explicit pipelines and such. The initial writing and subsequent editing would likely have been much easier. It does help that verilog and C share much of the same syntax.

The more I code with indexed strings the more I realize null-terminated strings aren't worth dealing with at all. I'm now using a string to hold the pre-tokenized CLI input, a temporary string to hold hex to ASCII converted data for CLI output, and four strings for the LCD display buffer (one for each display line, with auto-space padding at the end). To manage the complexity I'm trying to keep the assembly code as modular as makes sense, even if it means forfeiting some execution time efficiency.

Was writing assembly and noticed I didn't have a way to stick a non-zero value in memory without it being somehow associated with or interpreted as an opcode. I only had a way to initialize and mark contiguous portions of memory to zero, my Z16[] and Z32[] commands. So I generalized these to allow reserve & initialization to a value:

# lit

## lit

#[reps]

##[reps]

The first above allows initialization of a single opcode space (16 bits), the second two opcode spaces (32 bits). The last two initialize multiple spaces, but only allow initialization to zero. The first is the "real" HAL code, the last three are converted to it via pre-processing.

I also changed the NOP opcode to be POP with a zero immediate, rather than an unconditional zero immediate jump. This seems a bit safer, and is linguistically attractive as well (NOP = no pop). There are pluses and minuses to not having an entirely separate, reserved opcode for NOP (mostly in the simulator code).

Finally, I added a readable "interrupted status" field to the vector register. For safety, interrupted threads can't be "re-interrupted" until they have returned to normal operation. Reading the status repeatedly (e.g. via the CLI) gives a rough (statistical) indication of how much time a particular thread is spending servicing the interrupt, which is kind of handy. I once saw a programmer hook a scope up to a GPIO pin to observe this directly (the IRQ code would set the GPIO high at entry and low at exit). Real-time estimates can get kinda murky when executing higher level code, with caches and such confounding things even more.

Floats & Efficiency

The main reason I'm skittish about using floats in real-time processes is the very real-time they consume when you don't have dedicated float hardware in the processor. Most of the multipliers in the FPGA fabric are sitting idle (there is an 18x18 multiplier for every two block RAMs, so 15 mults in the E6 part, and 23 in the E10 - the Hive ALU currently uses 4 to do a 33 x 33 = 66 bit signed multiplication) so down the road I may somehow integrate some sort of co-processor.

What's going on in these float routines? There's input normalization, and this can include the normalization of input denorms. There's usually signed to unsigned conversion because this allows for the simplest calculations and provides the most headroom for them. Then there's the actual function we're trying to do in the first place, which may be a single cycle add / subtract / multiply, or a short unrolled loop of Newton's method for inverse, or "unrolled" polynomials for sin, cos, exp2, log2, etc. Then there's normalization of the result, and often sign calculation or restoration. If the answer is undefined this must somehow be conveyed to downstream processes (for conventional floats this is via in-band signalling, or special reserved patterns in the float itself).

So there's lots of time consuming piddling around both leading up to and upon exiting the core function. Conventional floats are severely constrained to fit in a power of 2 bits (32, 64, etc.) space, so there is even more farting around to make this storage as significant and precise as possible. Mathematicians require accurate rounding, which sometimes means a nominally 32 bit result must be worked out to 64 places just to know how to round. And hardware floating point units often punt when it comes to input denorms, trapping to software or microcode routines that are orders of magnitude slower than the normalized hardware path, which can also trap the unwary coder trying to do DSP on a general purpose CPU.

Some obvious optimizations for real-time DSP are:

1. Forget about rounding.

2. Don't use packed floats.

3. Doing (2) lets us skip either the input or the output normalization step.

(2) makes storage less efficient but sidesteps unpacking and packing, and also increases the significand and exponent resolution.

For (3) I'm thinking that skipping output normalization is probably the most efficient because input normalization is usually tied to the changing of signedness, and often also incorporates a simple but necessary change of variable to make a polynomial approach tractable. Many results are already fully or mostly normalized, and normalization of a result often doesn't do anything to increase the resolution of that result, so if we aren't space constrained and we normalize all inputs, then result normalization may be a waste of time (though the presence of unpacked denorms can make the mathematical comparison of two numbers more difficult).

Signalling is a tough nut to simplify, there's got to be some way or ways to do it, perhaps not in-band? The DSP cases for signalling can likely be pared down to a small handfull of the usual suspects (NaN, +/- infinity, etc.). Or maybe track this stuff in the higher algorithm ("hmm, maybe I don't want to send a negative number into the square root routine, or divide by zero, etc.").

A first pass at a hardware float unit might be to have it just quickly perform all the time consuming fiddly bits associated with the set up, staging, and tear down of of float operations (unpack, norm, change of sign, change of var, renorm, repack, etc.) and do the actual core functions themselves in short software routines. It would have to significantly lower float routine cycles for me to undertake the design and implementation of such a unit, as sharing the unit among threads could make it rather complex and cumbersome to use via the register set interface. I suppose if it had dedicated opcodes it could simply be an optional part of the ALU.

A second pass might do all that along with add, multiply, and inverse.

Other reasons not to like floats in DSP routines: exponent packing necessarily decreases the resolution of the significand, and this can lead to limit cycles or strangely high noise floors in low frequency audio filters if using 32 bit floats.

Some Hive float subroutine real-time inventory:

FUNC CYCLESadd 55sub 59mul 30sqr 23inv 47sqrt 53exp2 59log2 53 These are maximum cycles for each function, 32 bit significand and 16 bit exponent, normalization of inputs and outputs, flush to zero for small denorms. The add and subtract are surprisingly expensive, and this is because there is more set-up before the actual add can take place.

For operations that have to happen every 48kHz, there will be approximately 500 cycles to play with per thread, so around 10 float operations could happen in that interval per thread, or a total of 80 floats if all threads were employed.

I'm hoping to do all pitch and volume data capture, filtering, and linearization with at most with two threads (one thread each).

I have the feeling that flushing small denorms to zero is a more OK thing to do when the exponent has a wider range than the normal packed float exponent of 8 bits. A 16 bit exponent makes the "hole around zero" much smaller.

==========

Spent the morning reading about Risc-V, an open processor project that's starting to hit silicon. Floats are handled via register file and ALU expansion. Patterson (of Hennessy & Patterson / Patterson & Hennessy textbook fame) seems to have had a lot of input. I agree with most of the choices, though I'm not sure homologizing the ISA's (instruction set architectures) across multiple data widths is all that necessary and it could tie the designers hands. I don't like the absence of a leading zero count instruction, and making the zeroth register always read zero is an old trick that strikes me as wasteful. It's cached and pipelined with the usual branch prediction stuff and security level hardware.

I applaud any efforts to kill off the Intel ISA as soon as possible, and ARM should probably go by the wayside as soon as there is a decent open spec like Risc-V appears to be. I'd really like to see the whole external memory chip thing gone too, processors should live smack dab in the middle of a sea of memory. Architectures could get radically simpler if that were the case. And security detail belongs in a separate processor IMO.

Floating By

The IEEE floating point standard is really remarkable, they thought of everything! I suspect there's a long history behind it, with many hard(ware) lessons learned, it's great to encounter a spec that makes 100% sense.

But the fields are packed together - which is no trouble for hardware because bundling buses this way or that is essentially free - but in fixed-width hardware the packing and unpacking isn't free, and the packing itself imposes narrower precision and dynamic range limits than necessary on 2^n width calculations. For my own stuff I picked a 32 bit signed significand with 16 bit signed exponent, and indeed this form is also discussed somewhat in the IEEE spec. But even if you normalize all values in and out of functions, the maximum negative significand value can cause problems because it has no corresponding positive value. It's a corner case that you have to include special tests for all over the place, which slows things down.

I'm playing around with a IEEE style sign & magnitude significand, where the sign is either the MSb of the significand, or in a separate register. I'm also playing around with an implied '1' to the left of the significand, which makes all input normalized by nature, but since zero is a denorm it must then be "signaled" - and in the IEEE packed float this via reserved codes in the exponent, which makes a lot of sense because this only slightly impacts the dynamic range while keeping the precision maximal. If the sign is in a separate register one can "signal" zero via a zero there, with +1 and -1 values for pos & neg. Keeping me busy writing the floating add and multiply routines with the various representations.

One thing that really slows floating point calculations down is the significand normalization and exponent limiting done at the end, which is absolutely essential if the float is packed, but can be significantly relaxed or perhaps even postponed until the result is stored in memory if the exponent sits in a 32 bit register between calculations.

DSP calculations are often "fast and loose" because maximum absolute precision usually isn't required. For instance, a non-packed floating point multiply can be as short as 8 instructions if the least significant bit isn't important. I gotta keep remembering I'm building a Theremin, and not a scientific pocket calculator.

ADD_F : Artisanal, Curated, Handcrafted, Bespoke

OK, I'm trying out a float representation with:

32 bit unsigned magnitude with MSb = 1

16 bit signed exponent (not offset for now)

8 bit signed sign (-1, 0, +1)

The MSb = 1 unsigned magnitude means I/O values are explicitly normalized. This is simpler and more straightforward to implement than an implicit '1' scheme. The MSb = 1 obviously cuts resolution to 31 bits, but 32 bit range is restored with a separate 8 bit sign (this was a desired goal). The separate sign allows for easy zero, but more importantly this arrangement gets rid of the max negative 2's complement value that plagues the signed magnitude approach.

Using this float representation I've been able to pare the float addition down to 19 cycles maximum, and float multiplication now clocks in at an almost trivial 7 cycles maximum. With unsigned magnitude & separate sign you have to micro-manage addition (subtract when the input signs differ) but it's still significantly more efficient. Zeros are generally constrained, but really large exponents aren't - I'm thinking of constraining them at the point of use / storage. I may switch to an offset exponent to facilitate this once I convert the other functions (EXP2_F, LOG2_F, etc.) to this format.

One thing I've finally got something of a handle on is the structuring of these functions. Since this is very much "inner loop" code it must be brutally minimized. The optimal general form seems to be a series of quick tests / jumps that deal with zero inputs (zero is a denorm) and then sort out the various treatment scenarios, with a final normalization. Minimization of the longest path is the order of the day, so if code must be duplicated in the various branches to do this then that's what you do. As long as the other branches are shorter than the longest one then they're kosher, and they can easily take care of output zeros, cleanup, etc. in the cycle slack they have. It's very much the balancing out of a binary decision tree.

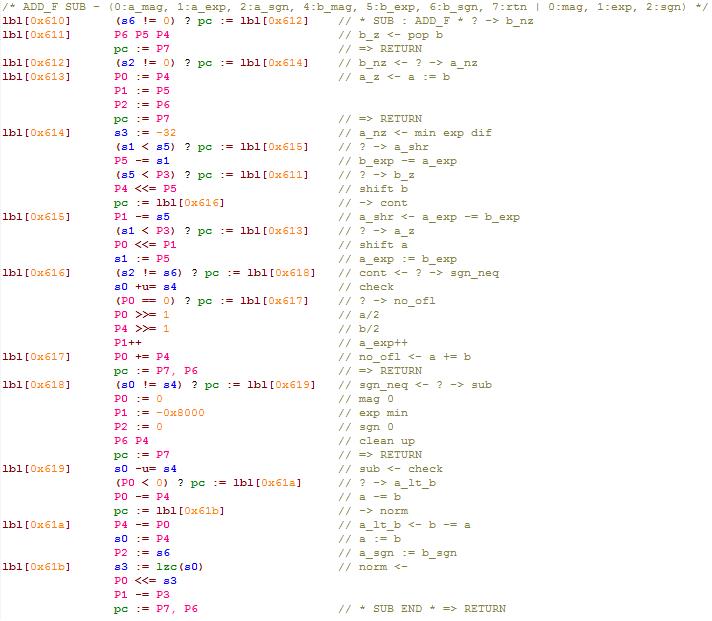

Above is the current ADD_F function which I'm testing now. It's rather nuanced and took me almost two days to write!

You must be logged in to post a reply. Please log in or register for a new account.