I showed you my path to wealth which could have financed your project but it is gone now, you may have been distracted by the trees. Some day when you are tired the two great walls to climb, in front of you, will be packaging and marketing.

I showed you my path to wealth which could have financed your project but it is gone now, you may have been distracted by the trees. Some day when you are tired the two great walls to climb, in front of you, will be packaging and marketing.

"I showed you my path to wealth which could have financed your project but it is gone now, you may have been distracted by the trees."

I appreciate that, but at this point even a guaranteed get-rich-quick scheme is a distraction. I'm not rich but I've got enough money.

"Some day when you are tired the two great walls to climb, in front of you, will be packaging and marketing."

Yes, not exactly looking forward to that. One of the few things I miss about working for a large company is the way other departments would handle physical design, manufacturing, and sales.

Variables and Pointers

At it's most basic and mechanical, programming is assigning data values to memory slots. Memory slots are consecutively numbered from zero, and this numbering is the address. Addresses are obviously unique, and an address can hold any data value. Addresses are generally full-width (i.e. 32 bits wide in a 32 bit machine) but the value at the memory address can span one or more address slots, be signed or unsigned, etc. In other words data is "typed" or needs extra information to properly evaluate it.

Programming languages support names that are convenient aliases for constant and variable data values, which may or may not exist at actual memory addresses. Opcode (processor instruction) data is inferred from the code. Data may be used to statically size constructs like arrays, and in this case serve more as a build parameter that gets removed at compile time. Data may make it through compilation and remain fixed (a constant) or it may be changed during run time (a variable). Data may exist in memory, or may only "live" for a time in processor registers. Data values in memory may be indexed by the address, immediate (contained in the opcode), or in-line literals (following the opcode).

The C language is something of an odd man out in that it has pointers and allows limited math to be performed on them. Pointers are just alias names for address values. Even though addresses are full-width, pointers are typed - why? So that it's clear what kind of data is being pointed to, and how to do math on the address. Pointers can also be the address of a subroutine or function, and in that case are typed according to the return value.

Say we want an integer variable in C named "d":

int d; // create int

We can assign it the value 5:

d = 5; // assign data

The address at this point is implicit, and we often don't care what it is.

We can create a pointer named "a" with the star:

int * a; // create pointer to int

We can assign it the address of d with the ampersand:

a = &d; // assign address

If we want the data of the thing pointed to we again use the star:

* a // dereference

which is equal to 5.

So we can define a variable name or pointer name without assigning them address nor data, nor even linking them, which is kind of interesting.

Array names are actually pointers, and demonstrate one use of pointer math:

int b[4]; // create array of 4 ints

Here "b" is a pointer to an int. We can assign a value to one element via indexing:

b[2] = 6; // assign data

Or via pointer math:

*(b + 2) = 6; // assign data

When we pass an array to a function we're actually passing it the address of the array, so the array contents can be changed by the function! Presumably this was done to limit needless copying large structures when calling functions, but it's a coding hazard.

I can see where they came from, and how they might be used, but I've never liked pointers in C and have avoided them as much as possible. For one thing I find the choice of syntax confusing. Why didn't they use @ instead of star? Maybe use the ampersand for declaration? Having pointers running around uncoupled seems dangerous, and mechanisms such as scoping can cause decoupling. Indeed, the use of pointers in C++ is discouraged. One blog comment I read recently stated that modern control structures (if, then, else, while, etc.) have made code more readable and have largely eliminated the need for GOTO and the like, which are too wide open and unstructured. And the object oriented approach has done the same for data structures, largely eliminating the need for pointers.

I discuss the above because I'm still grappling with how to deal with pointer syntax in my HAL assembly language. Really I just need a way to reserve, initialize, and link to a section of memory, and I already have this, but I'm looking to better integrate it into the assembly process. Looking at other assembly languages doesn't help much because they tend to be clunky and super low-level, with really arcane syntax.

Pointer Syntax

There's a lot of shorthand going on in the syntax of programming languages. Terse languages make for easier typing, but this can kind of obscure what is going on. Some shorthand going on with C arrays is that reference to the naked array name is to the base pointer, or address of the first element of the array. Reference to the indexed array name is to the data in the array:

int a[3]; // array of ints

a = 0xf5; // set value of base address

a[2] = 7; // set value of data at location 2

This is kind of odd because you might think that putting braces on a pointer would give you an array of pointers, rather than an array of data with a pointer to the first element.

I've decided to adopt this type of array / pointer syntax for HAL labels, with an '@' at the head to aid parsing, and sizing via the assignment statement rather than via typing:

@thd_0 := 0xd56a // declare label and assign address

@thd_0[4] :b= {1, 0} // reserve 4 bytes, initialize to 1, 0, 0, 0

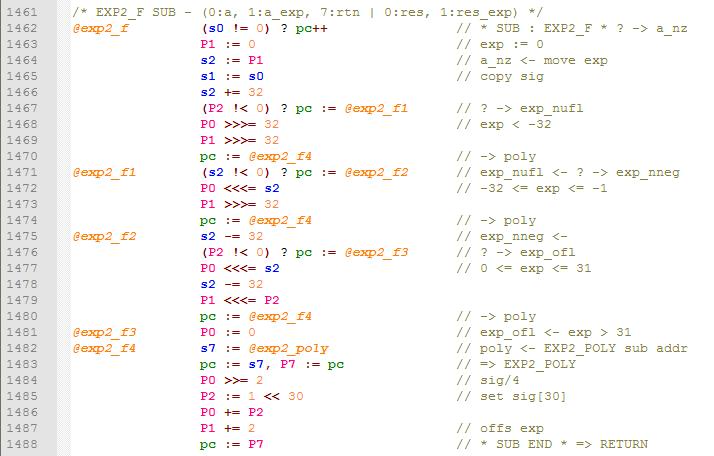

The first step above isn't necessary, if it's omitted then the label address is implicit. Here is an example of the style in a subroutine:

This provides for handy descriptive naming of subroutine labels, as well as very welcomed ad-hoc "scoping" of the label name space within. My previous labels took the form "LBL[#]" with unique numbering, and I found myself wasting a lot of time micromanaging the global assignment / sequencing of those numbers. I plan on using the C++ "unordered_map" to handle label value lookup during assembly.

Went through and really cleaned up the assembly language interpreter C++ code. What I've been calling the "tokenizer" is generally known in the parlance as a "lexer". Played around with alternate methods to recognize multi-character operators but fell back to what I was doing in the first place, which is to put spaces around symbols, tokenize (based on spaces), and then re-clump recognized sequences of operators back to single tokens. So the assembly:

@sin P3:=1<<24

gets "puffed up" (and lower cased) to:

@ sin p3 : = 1 < < 24

Next the two sequences ": =" and "< <" are recognized and reclumped back together:

@ sin p3 := 1 << 24

It bothers me a little that this kind of operator spacing is thus allowed in the language itself, but it's so brain dead simple to puff and clump that it's hard not to do it this way. (Clumping is done with the longest sequences recognized and reclumped first, then the next longest, etc. so as not to confuse subsets of similar longer sequences for the shorter ones. And the minus symbol in front of number is somewhat problematic regardless - is it an operator or a sign?)

I'm using a standard library <vector> of strings to hold the tokens, and a stringstream type to do the tokenizing, which works really well. Using the unordered_map to do the label processing is also working like a champ, now my labels are much more descriptive and useful, and much less fiddly in terms of assignment naming.

At the point now where the rest of the simulator code could use a good rogering. I'm thinking of pulling out all the features I'm not using in order to make it easier to maintain. With an assembly language I'm not going to write code in the sim anymore, so I can get rid of all the editing functions including the undo system. The core sim logic is based on object oriented modules, a situation which is rather cumbersome and confusing to clock. Thinking of going with a two phase clock to each module, with the "lo" clock evaluating the async logic, and the "hi" clock updating the synchronous (flop) values. If I made all module inputs async and all outputs sync, this would remove all possible race conditions when hooking it all together, and things could be clocked in any order, even multiple times (lo or hi) without harm. Like the cha-cha of a clock escapement.

Still at it, doing the general re-write of the assembler and sim code. Not at the sim point yet, but the assembler code is largely done. I've got it kicking out a text file that includes the address, the code length, the code as hex and a disassembled version of it, and a literal flag. It also kicks out the associated assembly source file line number, a super useful bit of info. You can get significant debugging of the whole shebang accomplished by comparing the assembly source, this generated file, and the SV simulation of it.

The switch to byte addressing is making me look at and reconsider a lot of things. I realize I've slowly slouched up to a variable width opcode with no desire nor intention of going there at all. The NOP (no operation) is now 1 byte, one and two operand ops are 2 bytes, one and two operand ops with an immediate value are 3 bytes, and immediate jumps are 2, 3, and 4 bytes. Literals can be thought of as 3, 4, and 6 bytes (if you count the jump over the memory port read). Variable width is good in that it is a form of compression, but bad in that it is more difficult to know what you're looking at in a memory dump, and calculating things like jump distances gets a lot trickier (though the use of assembly labels eliminates much of the guesswork). My old sim would go through memory and flag things that were literal values and, while it never gave me any trouble, I don't think that was actually a valid thing to do. I now use the literal information directly from the assembly process and store it in the memory module (the software version of it, not the hardware). Probably the best part of variable width instructions is they give the designer much greater freedom to do "what if" opcode explorations without worrying too much about shoehorning the new instructions into the opcode space. I wish I'd realized this a long time ago as much of my time has been spent just getting things to fit in the limited 16 bit space.

I've shuffled the basic opcode itself to have the operation byte located in the least significant byte position. The next byte up consists of the two stack select nibbles (in the case of unconditional jump +127/-128 it is the immediate jump distance). The next byte or bytes up form an 8 or 16 bit immediate value. This utilizes the full 32 bit width of the memory opcode read port. Placing the operation byte in the LSB keeps me from being tempted to use portions of it as immediate, but makes for an unconventional numerical ordering of the operations (they aren't grouped by simple arithmetic value anymore) and I suspect this may be one of the reasons processor designers pick big-endian for their architectures? I've long though that big endian was an attempt to make things ordered in memory more like the way we read and write numbers, rather than a desire for elegant design.

Anyway, lots of work to get back to where I was a couple of months ago, and I'm not there yet.

Conjoined Twin Separation Surgery

For the new sim code I decided to break the centralized opcode decoder into localized pieces. Routing the opcode itself through a pipe rather than decoded signals through their own separate pipes seems more straightforward, though it's harder to see the entire decode at a glance. This got me thinking something similar might work for the SystemVerilog code (which describes the actual processor hardware). So today I split the opcode decode in two, one side for the control ring and the other side for the data ring. Whew! I had to examine the code for hours before I began the repartition, and made sure I had a day free so I didn't miss critical details during the process. The surgery is done, and the core is back to building and passing verification, so tomorrow I'll rearrange the register set logic. Then it's back to the sim rewrite.

This exercise has cut many connections between control and data logic, and has brought resolution to many trivial signal "ownership" issues that have nagged me for years. The design is much cleaner now.

Programming languages (PLs) are so weird (to me). Hardware description languages (HDLs) make much more sense (to me) but I suppose that's because they are closer to the metal, and are used by hardware people who are fairly forced to be practical.

One thing HDLs really get right is bit manipulation (signals are indexed bit vectors), which is generally rather cumbersome in PLs (shifting and masking). A direct fallout of this is trivially easy concatenation and separation of sub signals in HDLs.

Another thing HDLs totally nail is signal assignment ownership. Unless the signal is a tri-state bus (unusual in HDL code, and often not even actually real in the hardware if not at a pin) there can only be one assignment driver. Multiple drivers in PL code are trivial to do, and are likely a huge source of bugs. PLs try to mitigate this through object oriented design, where objects "own" their local signals, which are manipulated via a local functions, thus "hiding" things and presenting a simplified interface to the rest of the code base. It's not a bad approach, and HDLs do something very similar with componentization. But object oriented PLs kind of fail when it comes to actually exposing information in a useful manner. In C++ there is no simple "read-only" mode for local state holders (you can approximate this by making a billion trivial functions to access the local information), whereas in HDLs output signals are by definition read-only externally, and input signals are read-only internally.

HDLs also directly support multiple return values from functions (i.e. components) but this is awkward to do in many PLs.

With all the smart technical people working with and on PLs it's a mystery to me why most of them suck so badly, and are such a poor fit to describing and solving everyday technical problems. When coders get together and talk about high-level concepts, the shoddy, fairly unmoored base of their implementations never seems to come up. It's all lambdas, iterators, namespace pollution (prepending std:: to literally everything and making the code generally much less readable), and other (IMO) BS.

=============

My rant above is precipitated by the sim work I'm doing in C++. Beyond dealing with and modeling the synchronous aspects of the HDL, I'm running into gobs of "ownership" situations that don't have clear object oriented solutions. Even though Hive has relatively little state compared to other 32 bit processors, with 8 threads there are a fair number of global state holders that must be readable by much of the logic. Globals (like gotos) are considered harmful, but most of the harmful is due to possible multiple drivers. Every fiber of my being is squealing, but I'm finding it easiest to construct a big core processor class, with all of the state holders just sitting there, and all the functions that operate on them as member functions. That's a big gob of state and a gigantic gob of member functions, with the rampant opportunity to accidentally back drive signals, but oh well. (I tried using "constant" in many of the functional input values, but this massively slowed down program execution, presumably due to unnecessary copying of arrays and such - ??? - the compiler doesn't seem smart enough to handle rational and routine types of needs).

Previously I had a thread class that got instantiated as 8 objects, but it wasn't synchronous and so relied too heavily on order of evaluation to fake the synchronous updating aspects. It worked, but before every modification I had to study it for a week, particularly when tinkering with the register set (where all the threads could interact).

=============

[EDIT] I've since discovered the C++ "bitset" library which indexes the bits in an int and such. This may make the register set easier to implement. And I'm back to using class objects for the main memory and stacks, but with only public elements, as this makes the name referencing of object data and functions more uniform.

Got the last of the core sim code mostly written yesterday - it compiles but (except for the HAL assembly/disassembly functions) is largely untested. The toughest part was the register set, particularly the interrupt register logic. This logic is a single layer of flops with feedback and write precedence, which makes it extra tricky. Feed-forward would have been more tractable, but the read and write operations have to happen in a timely fashion in order to fit with the rest of the pipeline timing. The C++ "bitset" data type was useful, and I was able to "hide" the asynchronous signals inside the clocking functions via old school "static" means. The code is definitely cleaner, but it remains more difficult to follow than I would like. I actually ended up modifying the System Verilog interrupt code as part of this exercise - it was implemented as a bit-slice component in a generate loop, and it is now natively parallel via a more conventional loop. Writing a simulator in a different language gives one fresh insights into the original design, and forces one to revisit and verify many of the critical aspects.

So now it's on to the simulator I/O. I'll still be doing it as text in the XP console, but am considering leaving out many of the features that I never really utilized in the old sim, such as the main memory grid display, the stacks memory display, memory editing functions, command line recall (~doskey), and operation history display. One main screen that shows the state of one thread, including a bit of stack depth and the register set contents, would likely be sufficient. I never really look at the individual opcode field decodes either, and instead rely on the disassembly to follow what's going on.

Got the core running a couple of days ago and added enough visibility (mostly stack state) to debug it today. Just got the last thread to pass verification a couple of minutes ago (thread 4, the one that performs stack and stack error testing). Found a couple of bugs in the verification software because of my decision to make stack errors and reporting of them sticky. Verification really should be done in as many ways as possible, and making a working model of the processor is a great way to do that, as well as making very necessary tooling.

Now to add a few other bells and such to make it a full-fledged simulation environment, but not too much. I suppose the reason I needed to do a re-write from the ground up was because the complexity all the features in the old sim caused the code to be fairly opaque to modification. That, and I have this negative thing for code that I'm not so familiar with - even if it's my own code and I've grown distant from it due to time passing - where I tend to think the writer was a total moron - LOL!. I'm an inverse Will Rogers because I rarely meet code I like.

Trying to squeeze as much coding in before I leave on the usual family vacation, which this year is at Stokes State Parke in SC where, weather permitting, we will be able to view the solar eclipse in totality. I saw a partial eclipse in Michigan when I was a wee budding engineer, via a telescope projecting onto a white piece of paper in a box.

Currently reading "One Jump Ahead: Challenging Human Supremacy in Checkers" by Jonathan Schaeffer. He basically solved checkers, and the book is quite an interesting read, provided you care at least somewhat about computing and/or checkers. His determination seems to have ruined his marriage, which is always something of a cautionary tale (and one that can be interpreted from various angles). Every time I think the way I spend my life is kinda weird I think about those who have acting as a profession, and feel a bit less out there (though I very much appreciate what they do). Books like this one help too, there's no substitute for obsession.

You must be logged in to post a reply. Please log in or register for a new account.