"Wha? I thought *that* was the thing to make stuff portable. One less source of unpredictability, diverging float implementations. At least you know the kind of errors to expect." - tinkeringdude

Kind of. You can still get real-time errors if you don't compile right (you can turn off software traps, but then you're turning off denormals and can get other errors). And not all modern processors adhere entirely to the IEEE 754 standard. I think I would have pushed hard for a universal minimal subset that didn't include denormals (while still keeping them as a defined option in the standard, of course).
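For anyone who hasn't bumped into denormals, here is a rough Python sketch of what they do at the bottom of the double range. It assumes a stock CPython build on hardware running with default IEEE 754 behavior (no flush-to-zero mode), and the variable names are just mine for illustration.

```python
import sys

# Smallest positive *normal* double (about 2.2e-308).
smallest_normal = sys.float_info.min

# Halving it lands in the denormal (subnormal) range instead of at zero.
denormal = smallest_normal / 2

# Smallest positive denormal double, 2**-1074.
smallest_denormal = 5e-324

# Halving that one finally underflows to zero.
underflowed = smallest_denormal / 2

print(denormal > 0.0)      # True with gradual underflow enabled
print(underflowed == 0.0)  # True
```

With denormals switched off (flush-to-zero), the first result would presumably come out as zero as well, which is the kind of "other error" you trade for avoiding the traps.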

"If you don't want computers to be limited to DRAM speeds (ok, they've come a long way, too!), I have not heard of a thing where re-starting the computation world would make this fundamental problem go away."

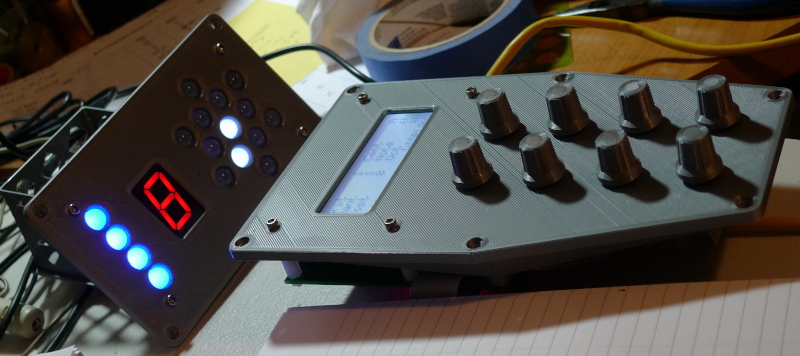

I think DRAM is a big part of the "problem". Keep current DRAM, with all the latency at that interface, and you inevitably end up with caches. I'd stick a dumb 32-bit barrel processor like Hive smack dab in a field of RAM/DRAM. 32-bit addressing gives you 4 GB. If you want more memory, you stick another processor or three on the board or in the chiplet. Cores and RAM always track, and cores aren't getting all that much faster anymore. You could have 4 GB memory-and-core units optimized for various things like DSP, floating point, graphics, bitcoin mining, crypto, whatever. Big talk!
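The arithmetic behind that, as a back-of-the-envelope Python sketch. It assumes byte addressing (a word-addressed core would reach more), and `units_needed` is a made-up helper, not anything from a real design.

```python
BYTES_PER_UNIT = 2 ** 32  # reach of a 32-bit byte address
GIB = 2 ** 30

def units_needed(total_gib):
    """How many core-plus-memory units cover total_gib of RAM."""
    total_bytes = total_gib * GIB
    return -(-total_bytes // BYTES_PER_UNIT)  # ceiling division

print(BYTES_PER_UNIT // GIB)  # 4
print(units_needed(16))       # 4
print(units_needed(10))       # 3
```

So memory size and core count scale together: every extra 4 GB brings its own processor along.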

[EDIT] I could be way off base, but I imagine 64-bit processors exist to some degree because the floating-point unit can then deal with 32-bit integers directly without a loss in precision. That is, you get 64-bit floats (and the considerable HW support they need) in order to handle 32-bit integers in the float pipe.
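The precision part of that is easy to check, whatever the historical motivation was. A 32-bit float has a 24-bit significand and a 64-bit float has a 53-bit one, so only the latter holds every 32-bit integer exactly. A small Python sketch (the helper names are mine; `struct` is used to force a value through a 32-bit float):

```python
import struct

def through_f32(n):
    """Round-trip an integer through a 32-bit float."""
    packed = struct.pack("<f", float(n))
    return int(struct.unpack("<f", packed)[0])

def through_f64(n):
    """Round-trip an integer through a 64-bit float (Python's float)."""
    return int(float(n))

print(through_f32(2**24 + 1))  # 16777216, off by one
print(through_f64(2**32 - 1))  # 4294967295, exact
print(through_f64(2**53 + 1))  # 9007199254740992, doubles run out here
```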