Number Formats & Readability

I've been thinking about number formats a lot, and the subject is much more complicated than I imagined. By formats, I mean the usual decimal, scientific, and engineering notations. Much of the complexity comes from the limits of human perception and readability; the rest comes from accurately converting the values to text. Some insights:

1. Fixing the number of decimal digits ("fix") in scientific notation actually sets the number of significant digits (to fix + 1), because there is always exactly one digit to the left of the decimal point. This unfortunately isn't the case for the other formats, so fix isn't a general way to control how many significant digits are displayed.

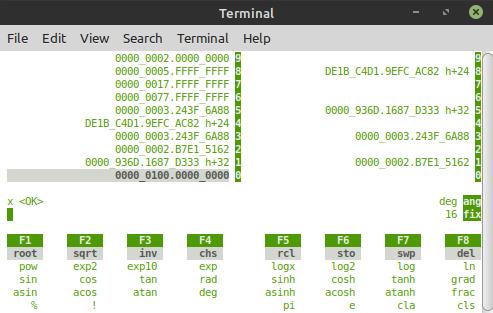

2. Thousands separators (groups of 3 digits) to the left of the decimal point can dramatically improve the readability of numbers much larger than one. But separators aren't generally used to the right of the decimal point, which severely limits readability for numbers much less than one.

3. Too many thousands separators can also be confusing, and one is generally only interested in a "close enough" answer, so displaying only the significant digits necessary, along with a shift value (exponent) can dramatically improve readability.

4. Engineering notation is nice because it lets you use the thousands prefixes directly (femto, pico, milli, kilo, mega, etc.). But because the exponent must be a multiple of 3, engineering notation forces "filler" zeros into values that have fewer significant digits than the integer part needs. For example, "500,000,000" only has one significant digit, but in engineering notation it is displayed as "500E+6", which requires 3 digits (zero padding of 2).

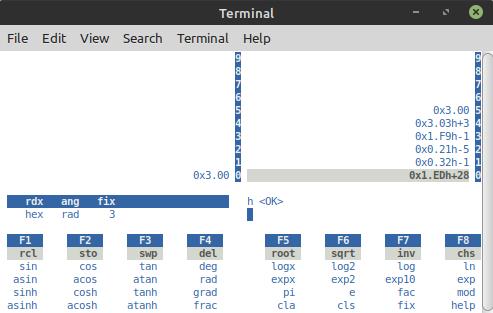
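To make the filler-zero effect concrete, here is a minimal sketch of an engineering-notation formatter. The function name `to_engineering` and its `sig_digits` parameter are my own placeholders, not anything from a library, and it leans on `snprintf` to do the rounding, so treat it as an illustration rather than a finished converter.

```cpp
#include <cassert>
#include <cmath>
#include <cstdio>
#include <cstdlib>
#include <string>

// Hypothetical helper: format `value` with `sig_digits` significant digits
// and an exponent that is always a multiple of 3.
std::string to_engineering(double value, int sig_digits) {
    assert(sig_digits >= 1 && sig_digits <= 17);
    if (!std::isfinite(value)) {
        return std::isnan(value) ? "nan" : (value < 0 ? "-inf" : "inf");
    }
    if (value == 0.0) return "0E+0";

    // Let printf round to the requested digits: "d.ddd...e+XX".
    char buf[64];
    std::snprintf(buf, sizeof buf, "%.*e", sig_digits - 1, value);
    std::string s(buf);

    std::size_t epos = s.find('e');
    int exp10 = std::atoi(s.c_str() + epos + 1);
    std::string digits = s.substr(0, epos);

    std::string sign;
    if (digits[0] == '-') { sign = "-"; digits.erase(0, 1); }
    std::size_t dot = digits.find('.');
    if (dot != std::string::npos) digits.erase(dot, 1);

    // Move the exponent down to the nearest multiple of 3; the remainder
    // (0, 1 or 2) is how many extra digits land left of the decimal point.
    int shift = ((exp10 % 3) + 3) % 3;
    int eng_exp = exp10 - shift;

    // The integer part needs shift + 1 digits. If the significant digits
    // run out first, the gap is filled with zeros (the "filler" zeros).
    std::size_t int_len = static_cast<std::size_t>(shift) + 1;
    if (digits.size() < int_len) digits.append(int_len - digits.size(), '0');

    std::string out = sign + digits.substr(0, int_len);
    if (digits.size() > int_len) out += "." + digits.substr(int_len);
    out += "E";
    out += (eng_exp < 0 ? "-" : "+");
    out += std::to_string(std::abs(eng_exp));
    return out;
}
```

Calling `to_engineering(500000000.0, 1)` returns "500E+6": one significant digit was requested, but two filler zeros are needed to reach an exponent of 6.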

For these reasons, calculators and programming languages usually offer a way to force a particular display format, plus a "best fit" format that picks the notation based, presumably, on how readable the result will be for the given value. C++ has a "best fit" format for base 10 display that works fairly well, but unfortunately nothing equivalent for hex display when the input value is a quad float (__float128). This gap in C++ is what has forced me to look into the issue much more deeply than I had initially intended.
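For plain `double` values, the forced and "best fit" base 10 formats can be compared with the standard stream manipulators. This is only a sketch: the `Notation` enum and `format_value` wrapper are names I made up for the comparison, and I'm assuming `std::defaultfloat` is the "best fit" mode in question. It does not address the hex or `__float128` case.

```cpp
#include <cassert>
#include <iomanip>
#include <ios>
#include <sstream>
#include <string>

// Hypothetical selector for the three base 10 display modes.
enum class Notation { Fixed, Scientific, BestFit };

// Format `value` using the chosen mode. `digits` is passed straight to
// std::setprecision, so its meaning depends on the mode:
//   Fixed / Scientific: digits after the decimal point
//   BestFit:            maximum number of significant digits
std::string format_value(double value, Notation notation, int digits) {
    assert(digits >= 0);
    std::ostringstream out;
    switch (notation) {
        case Notation::Fixed:      out << std::fixed;        break;
        case Notation::Scientific: out << std::scientific;   break;
        case Notation::BestFit:    out << std::defaultfloat; break;
    }
    out << std::setprecision(digits) << value;
    return out.str();
}
```

With `digits` set to 3, the value 56.234 comes out as "56.234" in fixed mode (five significant digits), "5.623e+01" in scientific mode (four, i.e. fix + 1), and "56.2" in best-fit mode (three). The same precision setting means something different in each mode, which is the fix-versus-significant-digits mismatch from the list above.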

===============

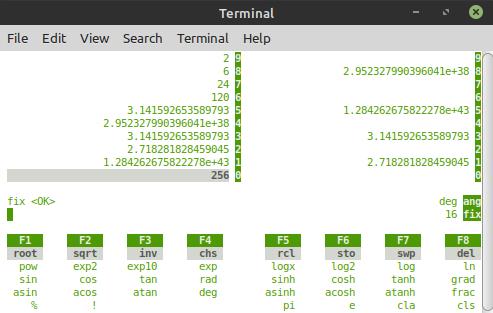

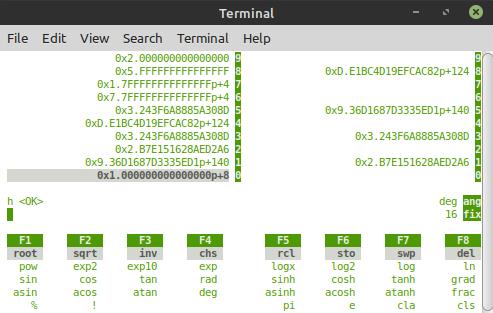

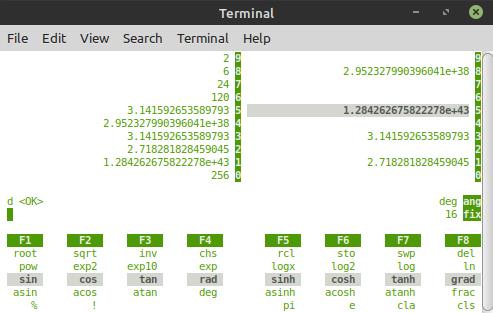

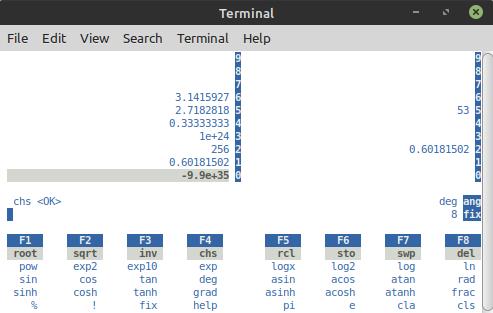

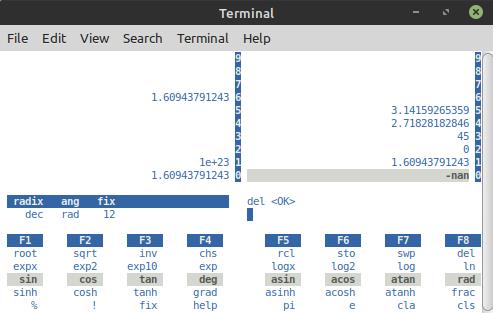

Trying to keep the coding effort alive, though it's really slow going. Yesterday I made a class for the stack so it's easier to interact with via an index (the vector type is a kind of LIFO, but its indexing is reversed relative to how I label the stack indices). Some basic stack manipulations are now handled directly by the class. I also made command errors show up in a red font in the history, along with other minor improvements. So the whole thing is basically there and in good shape - except for the actual display of the numbers!
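The index reversal is easy to get wrong, so here is a rough sketch of the idea. It is not the actual class from the project: the name `ValueStack`, the use of `double` for the elements, and the choice of level 0 as the top of the stack are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical calculator stack. Level 0 is the most recently pushed
// value, which lives at the BACK of the underlying std::vector.
class ValueStack {
public:
    void push(double v) { items_.push_back(v); }

    double pop() {
        assert(!items_.empty());
        double v = items_.back();
        items_.pop_back();
        return v;
    }

    std::size_t size() const { return items_.size(); }

    // Translate a stack level (0 = top) into a vector index (0 = oldest).
    double& at_level(std::size_t level) {
        assert(level < items_.size());
        return items_[items_.size() - 1 - level];
    }

    // Basic manipulations, expressed in terms of stack levels.
    void swap_top() {
        assert(items_.size() >= 2);
        std::swap(at_level(0), at_level(1));
    }

    void dup() {
        assert(!items_.empty());
        double top = items_.back();  // copy first, then grow the vector
        items_.push_back(top);
    }

    void drop() {
        assert(!items_.empty());
        items_.pop_back();
    }

private:
    std::vector<double> items_;
};
```

Keeping the level-to-index translation in one place (`at_level`) means the rest of the code can talk in stack levels and never touch the vector index directly.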

===============

Alternative Scientific Notation Format?

Did "they" blow it when "they" came up with the scientific notation format? I mean, why have anything significant to the left of the decimal point? For example, the value "56.234" in scientific notation is "5.6234E+1", but wouldn't it be better as "0.56234E+2"? A positive exponent then directly indicates how many digits there are to the left of the decimal point. Another example: "0.0045" in scientific notation is "4.5E-3", but wouldn't it be better as "0.45E-2", where the negative exponent directly indicates how many padding zeros sit to the right of the decimal point? Hmm...
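The alternative format is just ordinary scientific notation with the decimal point moved one place to the left and the exponent bumped by one. A minimal sketch, assuming `snprintf` handles the rounding; the function name `to_leading_zero_notation` is made up for this example:

```cpp
#include <cassert>
#include <cmath>
#include <cstdio>
#include <cstdlib>
#include <string>

// Hypothetical helper: format `value` as 0.dddE+n, where all significant
// digits sit to the right of the decimal point.
std::string to_leading_zero_notation(double value, int sig_digits) {
    assert(sig_digits >= 1 && sig_digits <= 17);
    assert(std::isfinite(value));
    if (value == 0.0) return "0.0E+0";

    // Start from standard scientific notation: "d.ddd...e+XX".
    char buf[64];
    std::snprintf(buf, sizeof buf, "%.*e", sig_digits - 1, value);
    std::string s(buf);

    std::size_t epos = s.find('e');
    // Moving the point one place left raises the exponent by one.
    int exp10 = std::atoi(s.c_str() + epos + 1) + 1;
    std::string digits = s.substr(0, epos);

    std::string sign;
    if (digits[0] == '-') { sign = "-"; digits.erase(0, 1); }
    std::size_t dot = digits.find('.');
    if (dot != std::string::npos) digits.erase(dot, 1);

    std::string out = sign + "0." + digits + "E";
    out += (exp10 < 0 ? "-" : "+");
    out += std::to_string(std::abs(exp10));
    return out;
}
```

With this sketch, `to_leading_zero_notation(56.234, 5)` gives "0.56234E+2" and `to_leading_zero_notation(0.0045, 2)` gives "0.45E-2", matching the two examples above.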