Noise & Bit Growth

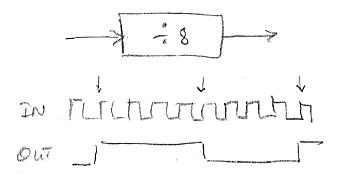

Pic from my post above on axis number acquisition:

You can see that we start out with tons of bits from the DPLL NCO, but if we examine the bits when the system is stable, we find that a whole lot of the least significant bits are changing quickly even when nothing else is going on, and are therefore not very useful. Simply truncating the noisy bits away leaves us with too few bits for musical purposes, and gives a step-like or "zippery" non-smooth response to our hand movements. What to do?

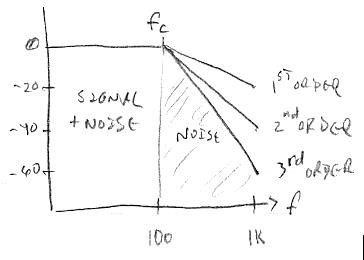

If we look closer at the unstable bits, we see that much of the bit flipping is happening really fast; in other words, the noise has strong high frequency content. And we know that our senses will tolerate gestural bandwidth down to ~100Hz (or perhaps even less) before noticing anything is awry (laggy, slow, delayed, etc.). So the first obvious move is to stick a 100Hz lowpass filter on there. This lets our gestures through, unfortunately along with any noise in the 0Hz to 100Hz passband, but more importantly it lops off the noise above 100Hz, and at the output of the filter we discover that we've got quite a few more stable bits to work with. Yay! Can we do better?
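
To put a rough number on that first filter, here's a minimal sketch that pushes white Gaussian noise through a one-pole IIR lowpass at 100Hz and measures how much the RMS noise drops. Every halving of RMS noise is one more stable bit. The 48kHz rate and the white-noise model are assumptions for illustration only; the real DPLL noise isn't white and the real filter isn't necessarily a one-pole.

```python
import math
import random

def one_pole_lowpass(x, fc, fs):
    """First-order IIR lowpass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * fc / fs)  # smoothing coefficient
    y, out = 0.0, []
    for v in x:
        y += a * (v - y)
        out.append(y)
    return out

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

FS = 48_000.0  # assumed sample rate, for illustration only
FC = 100.0     # gestural bandwidth cutoff from the text

random.seed(1)
noise = [random.gauss(0.0, 1.0) for _ in range(200_000)]  # white "LSB jitter" (assumed model)
filtered = one_pole_lowpass(noise, FC, FS)[1_000:]        # skip the start-up transient

# Each halving of RMS noise buys one more stable bit.
bits_gained = math.log2(rms(noise) / rms(filtered))
print(f"stable bits gained: {bits_gained:.2f}")
```

For these assumptions, theory puts the gain at roughly 3.6 bits, since the filter shrinks the noise bandwidth from 24kHz to an equivalent noise bandwidth of about 157Hz.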

This may sound self-evident, but it took me forever to come to grips with conceptually: noise reduction is really all about reducing the area of the frequency response where the noise resides (duh). If your lowpass filter is first order, then the noise above the cutoff frequency will be attenuated at a rate of 20dB / decade. For a first order filter set to 100Hz, at the 10 times higher frequency of 1kHz the response is reduced by a factor of 10, or -20dB; at 100 times the cutoff frequency, or 10kHz, it is reduced by a factor of 100, or -40dB, etc. It's a simple 1:1 slope when graphed log:log (something I never learned in school). This downward-sloping attenuation region above the cutoff frequency is known as the filter "stopband" or "skirt", and it is the area underneath the skirt that lets in the noise. If we can steepen the skirt we reduce the area beneath it, thus reducing noise without affecting our signal. The way to steepen the skirt is to employ a higher order filter, and this can be as simple as cascading first order filters. Each increase of one in the filter order steepens the skirt by a further 20dB / decade, so a second order filter gives us -40dB / decade, a third order gives us -60dB / decade, etc.
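
The skirt arithmetic can be checked with the textbook magnitude response of identical first-order lowpass sections. This sketch assumes idealized analog sections all sharing the same cutoff; it just evaluates the formula at 1kHz and 10kHz for a 100Hz cutoff.

```python
import math

def cascade_gain_db(f, fc, order):
    """Magnitude (dB) of `order` identical first-order lowpass sections, each cut off at fc."""
    # One section: |H(f)| = 1 / sqrt(1 + (f/fc)^2), so N sections give -10*N*log10(1 + (f/fc)^2) dB.
    return -10.0 * order * math.log10(1.0 + (f / fc) ** 2)

for order in (1, 2, 3):
    row = [f"{cascade_gain_db(f, 100.0, order):7.1f}" for f in (1_000.0, 10_000.0)]
    print(f"order {order}: 1kHz {row[0]} dB, 10kHz {row[1]} dB")
```

Well above the cutoff, each added section contributes roughly another -20dB per decade, matching the 1:1 log:log slope per order described above.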

If we stick a second first order lowpass filter after our first one, we notice at its output that more bits are now stable, but the number of stable bits we've gained isn't nearly as dramatic as what our first filter gave us. Sticking a third first order lowpass filter after the second provides an even smaller increase in stable bits, so we've clearly entered diminishing returns territory. Our first mild filter gave us a big bit gain by massively reducing the noise bandwidth; the subsequent filters' bit gains were smaller and smaller because all they could do was fractionally decrease the noise via skirt area reduction.
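
The diminishing returns fall straight out of the equivalent noise bandwidth (ENBW) of a cascade. For white noise, RMS noise scales with the square root of bandwidth, so bits gained = ½·log2(input bandwidth / ENBW). This sketch assumes white noise over a hypothetical 24kHz raw bandwidth and holds the per-section cutoff fixed at 100Hz. Holding it fixed also narrows the overall passband as sections are added, so it slightly flatters the later stages.

```python
import math

def enbw_factor(order):
    """ENBW of `order` identical first-order lowpass sections, as a multiple of fc:
    integral_0^inf (1 + x^2)^-order dx = sqrt(pi)/2 * Gamma(order - 1/2) / Gamma(order)."""
    return math.sqrt(math.pi) / 2.0 * math.gamma(order - 0.5) / math.gamma(order)

def bits_gained(input_bw, fc, order):
    """Stable bits gained for white noise: RMS noise scales with sqrt(bandwidth)."""
    return 0.5 * math.log2(input_bw / (enbw_factor(order) * fc))

INPUT_BW = 24_000.0  # assumed raw noise bandwidth (Nyquist of a 48kHz stream)
FC = 100.0           # same per-section cutoff for every stage (no cascade compensation)

prev = 0.0
for order in range(1, 5):
    total = bits_gained(INPUT_BW, FC, order)
    print(f"order {order}: {total:.2f} bits total, +{total - prev:.2f} from this stage")
    prev = total
```

Under these assumptions the first section buys about 3.6 bits, the second about half a bit, and the third and fourth only a fraction of a bit each.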

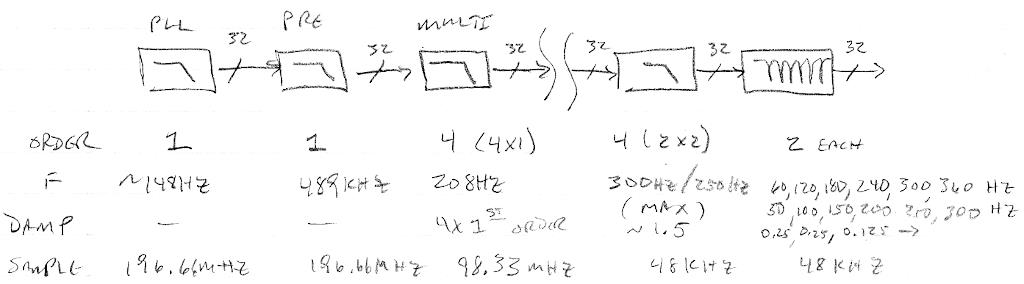

And as detailed in the post above, the filter order I ultimately picked for this filtering was based more on alias control at the rate changing interfaces than on bit noise improvement. That said, I of course used the lowest acceptable gestural bandwidth of ~100Hz to start the skirts as early as possible, which maximizes the anti-aliasing action and bit noise reduction, and minimizes the number of filters required. (I should also mention that cascading filters tends to lower the overall cutoff frequency of the result, so the individual cutoffs must be adjusted up somewhat, hence my use of 148Hz for the DPLL cutoff, and 208Hz for the "multi" filter cutoff above.)
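
For the simple case of N identical first-order sections, the cutoff shrinkage has a closed form. The cascade's power response is (1 + (f/fc)²)^-N, and setting that to ½ puts the overall -3dB point at fc·√(2^(1/N) − 1). This sketch inverts that to get the per-section cutoff. The D-Lev's 148Hz and 208Hz values come from its particular DPLL loop and "multi" filter, whose structure isn't detailed here, so don't expect these numbers to match them exactly.

```python
import math

def per_section_cutoff(target_fc, order):
    """Cutoff each of `order` identical first-order sections needs so the
    cascade's overall -3dB point lands at target_fc.
    Overall -3dB point: f_3dB = fc * sqrt(2^(1/order) - 1)."""
    return target_fc / math.sqrt(2.0 ** (1.0 / order) - 1.0)

for order in (1, 2, 3, 4):
    print(f"order {order}: each section at {per_section_cutoff(100.0, order):.1f} Hz")
```

For a 100Hz overall target this works out to roughly 155Hz per section for two sections, 196Hz for three, and 230Hz for four.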

In software, the variable 4th order lowpass filter does a bit of smoothing on our 48kHz down-sampled data, but more importantly it squashes any 60Hz / 50Hz mains hum harmonics above the cutoff frequency, which allows us to use fewer notch filters to clean up the remaining harmonic noise. Things are arranged so that the 4th order cutoff frequency is reduced in proportion to hand movement away from the antenna, thus squashing noise even more in this region of operation. This is clearly at the expense of gestural bandwidth in the far field, but the effect isn't obvious, and the far field kind of sucks anyway from a playability perspective on any Theremin due to a variety of issues (drift and body movement degrade long-term null accuracy and therefore linearity, and analog Theremins often suffer further non-linearity due to oscillator coupling).
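
Here's a minimal sketch of a distance-dependent 4th order lowpass, assuming it's built from four cascaded one-pole sections whose shared coefficient is recomputed every sample. The class name, the linear `cutoff_from_distance` mapping, its 100Hz and 10Hz endpoints, and the 0..1 distance scale are all hypothetical; the D-Lev's actual filter structure and mapping may differ. The usage below feeds in 60Hz hum and compares the residue near and far from the antenna.

```python
import math

class VariableLowpass4:
    """Four cascaded one-pole lowpass sections sharing a cutoff that may
    change every sample (hypothetical sketch, not the D-Lev's filter)."""

    def __init__(self, fs):
        self.fs = fs
        self.state = [0.0] * 4

    def process(self, x, fc):
        a = 1.0 - math.exp(-2.0 * math.pi * fc / self.fs)  # per-sample coefficient
        for i in range(4):
            self.state[i] += a * (x - self.state[i])
            x = self.state[i]  # each section feeds the next
        return x

def cutoff_from_distance(d, fc_near=100.0, fc_far=10.0):
    """Hypothetical mapping: d in [0, 1] (0 = at antenna, 1 = far field);
    cutoff falls linearly from fc_near to fc_far as the hand moves away."""
    d = min(max(d, 0.0), 1.0)
    return fc_near + (fc_far - fc_near) * d

FS = 48_000.0

def hum_residue(d):
    """Peak output for one second of unit-amplitude 60Hz hum at hand distance d."""
    lp = VariableLowpass4(FS)
    fc = cutoff_from_distance(d)
    peak = 0.0
    for n in range(48_000):
        y = lp.process(math.sin(2.0 * math.pi * 60.0 * n / FS), fc)
        if n > 24_000:  # ignore the start-up transient
            peak = max(peak, abs(y))
    return peak

print(f"near: {hum_residue(0.0):.4f}, far: {hum_residue(1.0):.6f}")
```

Near the antenna the 60Hz hum is only mildly reduced, while in the far field it is driven down by roughly three orders of magnitude, at the cost of a sluggish response there.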

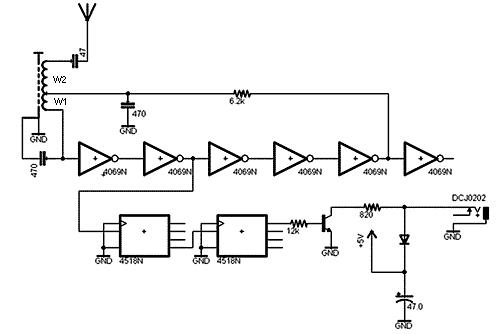

Finally, there are local interferers to deal with. I found that the regular LCD updating was injecting a 12Hz spike into the axis response, so I changed the code to update the display only when there was an on-screen data change, and the spike disappeared. The LED tuner is updated at 48kHz, so its interference would likely be mostly filtered away, but just to be safe I made its PWM pseudo-random rather than counter-based, and I also massively jittered the data going to it in a pseudo-random way.
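
A common way to make PWM pseudo-random is to compare the duty value against an LFSR instead of a ramp counter. The on-time per period stays the same, but the edges are scattered rather than repeating at a fixed rate, which smears the interference spectrum. This sketch assumes a 16-bit maximal-length Galois LFSR (taps 0xB400, period 65535). The width, taps, seed, and function names are illustrative choices, not the D-Lev's actual FPGA logic, and the data jittering isn't shown.

```python
def lfsr16_step(state):
    """One step of a 16-bit maximal-length Galois LFSR (taps 0xB400, period 65535)."""
    lsb = state & 1
    state >>= 1
    if lsb:
        state ^= 0xB400
    return state

def random_pwm(duty, steps, seed=0xACE1):
    """Pseudo-random PWM: high when the LFSR value is <= duty (duty in 0..65535).
    Over a full 65535-step period the LFSR visits every nonzero value once,
    so the high count equals duty exactly."""
    state, out = seed, []
    for _ in range(steps):
        state = lfsr16_step(state)
        out.append(1 if state <= duty else 0)
    return out

def counter_pwm(duty, steps):
    """Conventional counter-based PWM for comparison (period 65535)."""
    return [1 if (n % 65535) + 1 <= duty else 0 for n in range(steps)]

def edges(bits):
    """Number of output transitions."""
    return sum(a != b for a, b in zip(bits, bits[1:]))

r, c = random_pwm(16384, 65535), counter_pwm(16384, 65535)
print(f"on-time: random {sum(r)}, counter {sum(c)}")
print(f"edges:   random {edges(r)}, counter {edges(c)}")
```

Both outputs deliver the same average level. The counter version switches once per period, while the LFSR version switches thousands of times at irregular intervals.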

One other thing: there was a weird "traveling hump" in the axis spectrum that followed the audio oscillator pitch. I figure it was caused by rhythmic current draw in the FPGA core from the NCO and filtering calculations that generate the audio. Arranging things so that the variable cutoff of the 4th order filter was always below the pitch killed the hump pretty much dead.
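
One way to keep the variable cutoff below the pitch is a simple clamp. This sketch assumes the hump sits at the pitch frequency, models the 4th order filter as four identical first-order sections, and uses a hypothetical `margin` of 0.5 (cutoff at most half the pitch). It reports how much the hump is attenuated with and without the clamp for a 100Hz gesture cutoff.

```python
import math

def clamp_cutoff_below_pitch(fc_gesture, pitch_hz, margin=0.5):
    """Keep the lowpass cutoff at or below margin * pitch (margin is hypothetical)."""
    return min(fc_gesture, margin * pitch_hz)

def hump_attenuation_db(fc, pitch_hz, order=4):
    """Attenuation at the pitch of `order` identical first-order sections cut off at fc."""
    return -10.0 * order * math.log10(1.0 + (pitch_hz / fc) ** 2)

for pitch in (55.0, 110.0, 440.0):
    fc = clamp_cutoff_below_pitch(100.0, pitch)
    print(f"pitch {pitch:5.0f} Hz: cutoff {fc:5.1f} Hz, "
          f"hump {hump_attenuation_db(fc, pitch):6.1f} dB "
          f"(unclamped {hump_attenuation_db(100.0, pitch):6.1f} dB)")
```

With a margin of 0.5, the hump is always attenuated by at least about 28dB, however low the pitch goes. The trade-off is that low notes force a lower gestural bandwidth.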

So, there you have it. Early on I was obsessed with theoretical ways to "grow the bits" as wide as possible. In practice, I found that I could rely on the DPLL itself and the anti-alias sample rate reduction filters to do most of that sort of bulk filtering. In the end, though, mains hum turned out to be a major component of the overall noise, and special attention also had to be paid to local interferers. I found that there was plenty of noise below the 100Hz cutoff that had to be dealt with on a case-by-case basis with special filtering / randomizing.

The D-Lev environmental filtering and local interference reduction is so effective that I'm seeing individual data steps on the LED tuner where the resolution totally poops out (at null). Before I took these various mitigation steps I was seeing mostly noise there. I wish I had known up-front that noise reduction is much more important than increasing the resolution via averaging; I could easily have shaved a year or two off of my investigations / efforts. Resolution by itself isn't useful without effective noise reduction, and resolution will just "happen" if you start with a reasonable number of bits and do routine filtering. And the extreme far field (0.6m to 1.0m) is kinda crap no matter what you do, and nobody plays there anyway (not with any precision), so it's not worth obsessing over.