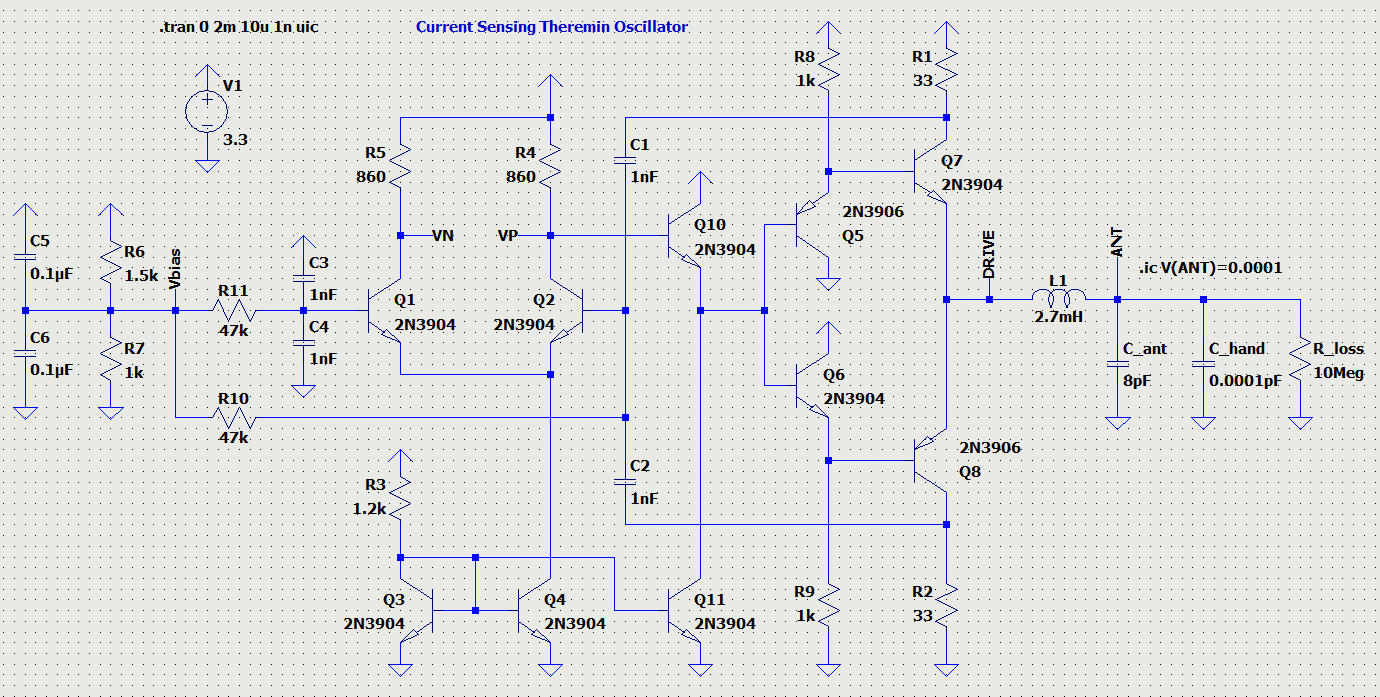

Trying to simulate mains hum noise in LTspice.

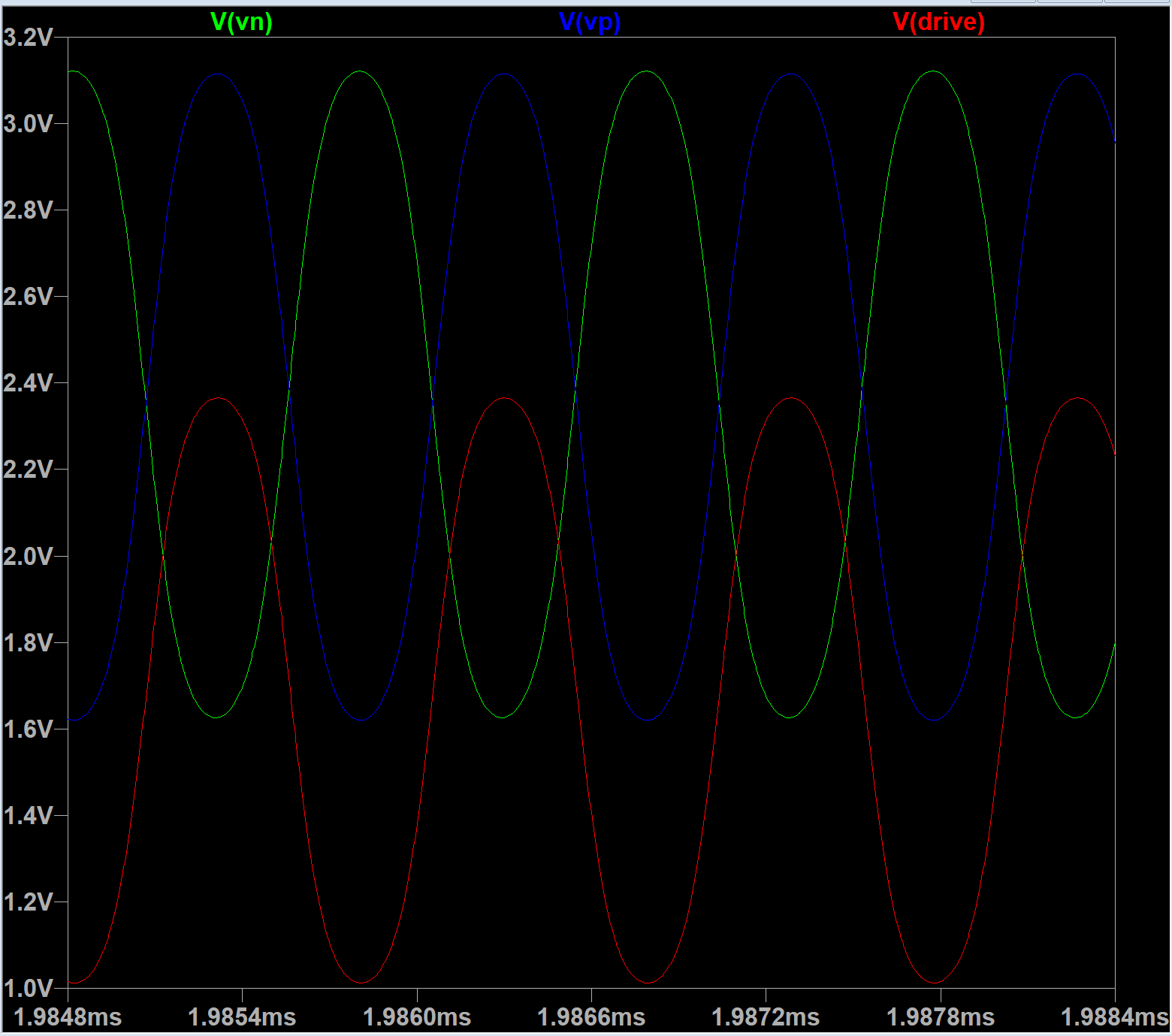

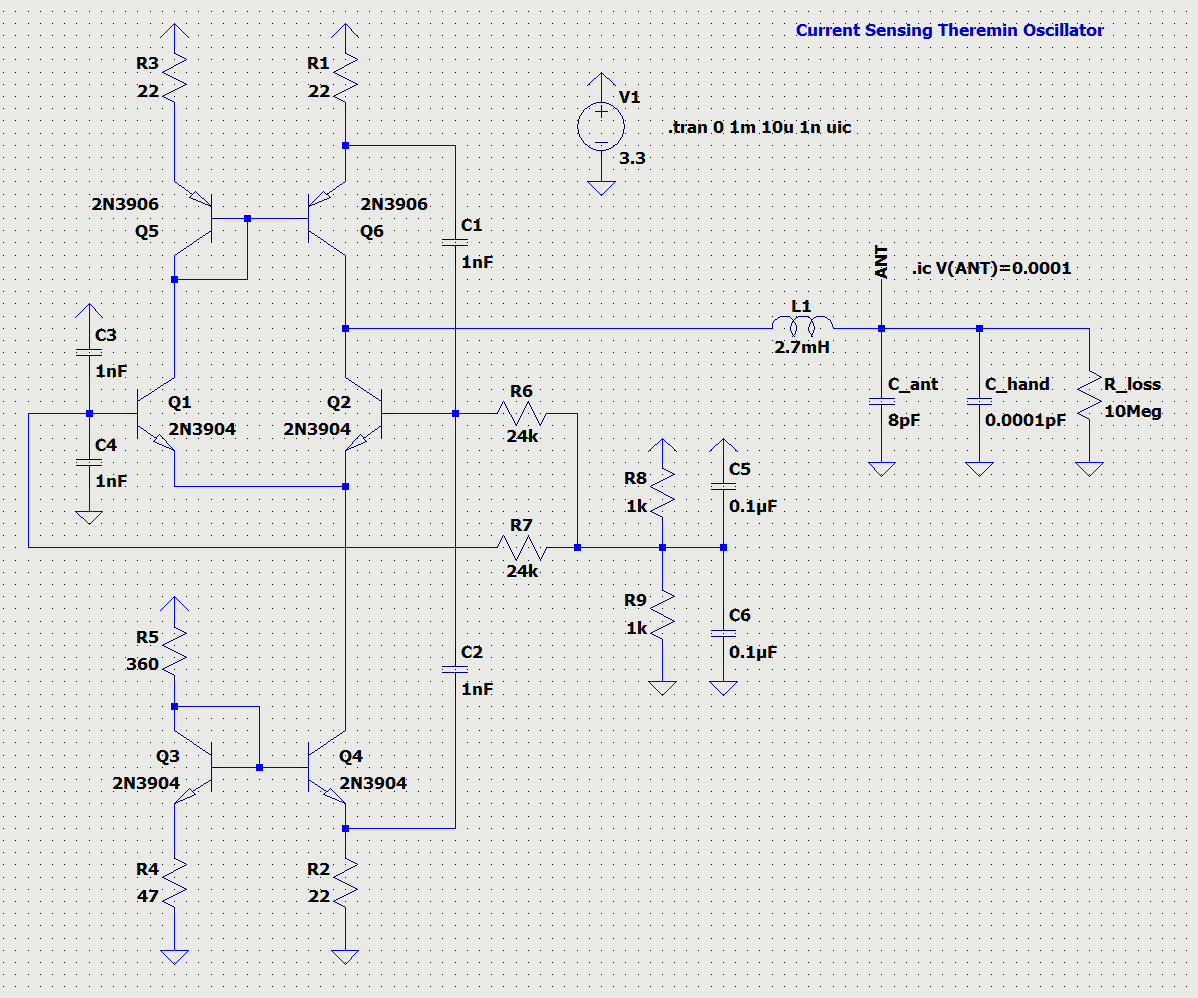

I'm using my recent model with 4-BJT current sensing on both rails, and dual unbuffered LVC inverters as the drive.

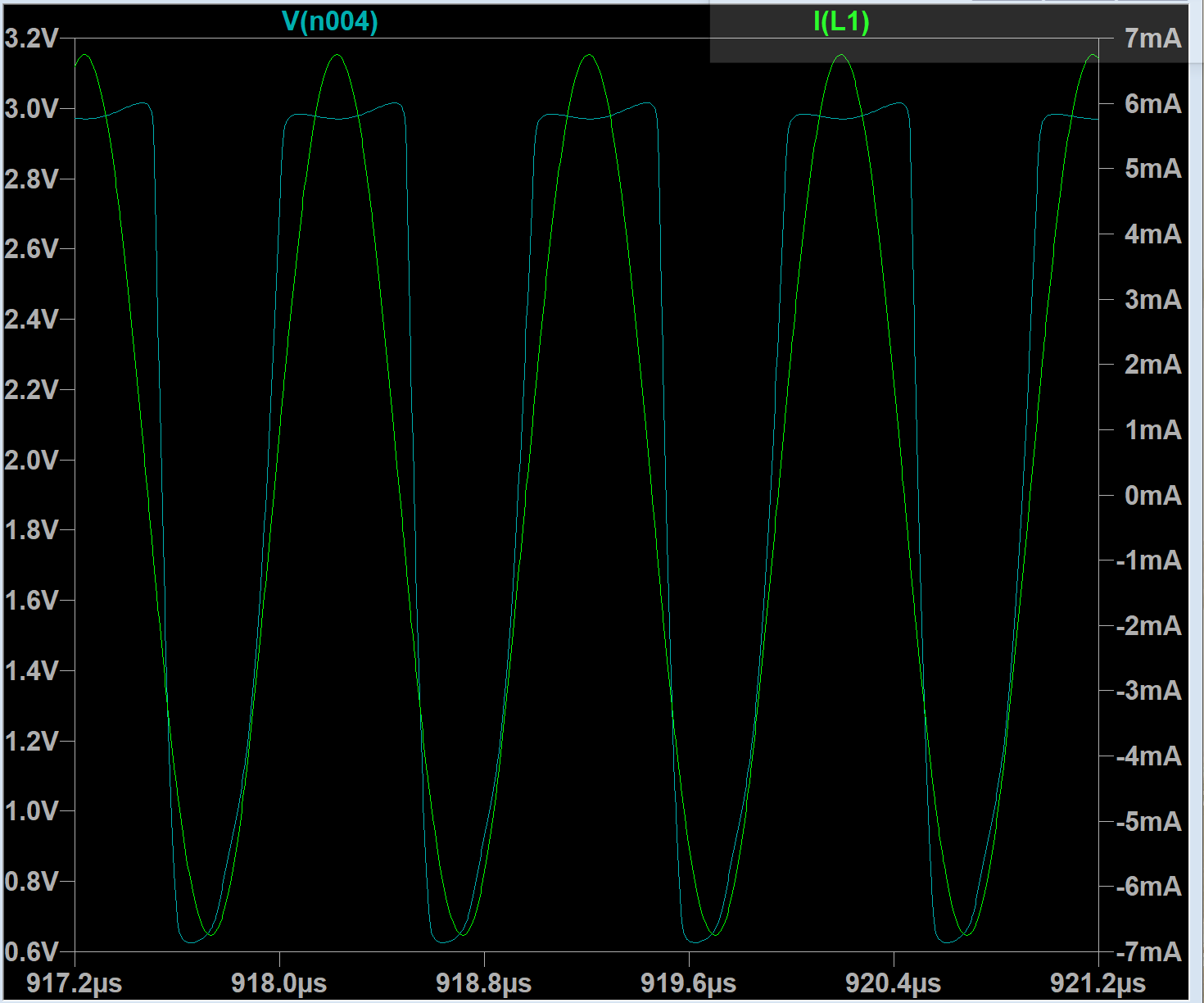

I connected C_ant to a 100Hz 240Vpp voltage source that simulates the noise, instead of GND. 100Hz is used instead of 50/60Hz to speed up the simulation. It should be more visible in the oscillator anyway.

The noise period is 10ms. Let's try to simulate a scope with a trigger delay of 1/2 of the mains hum period = 5ms.

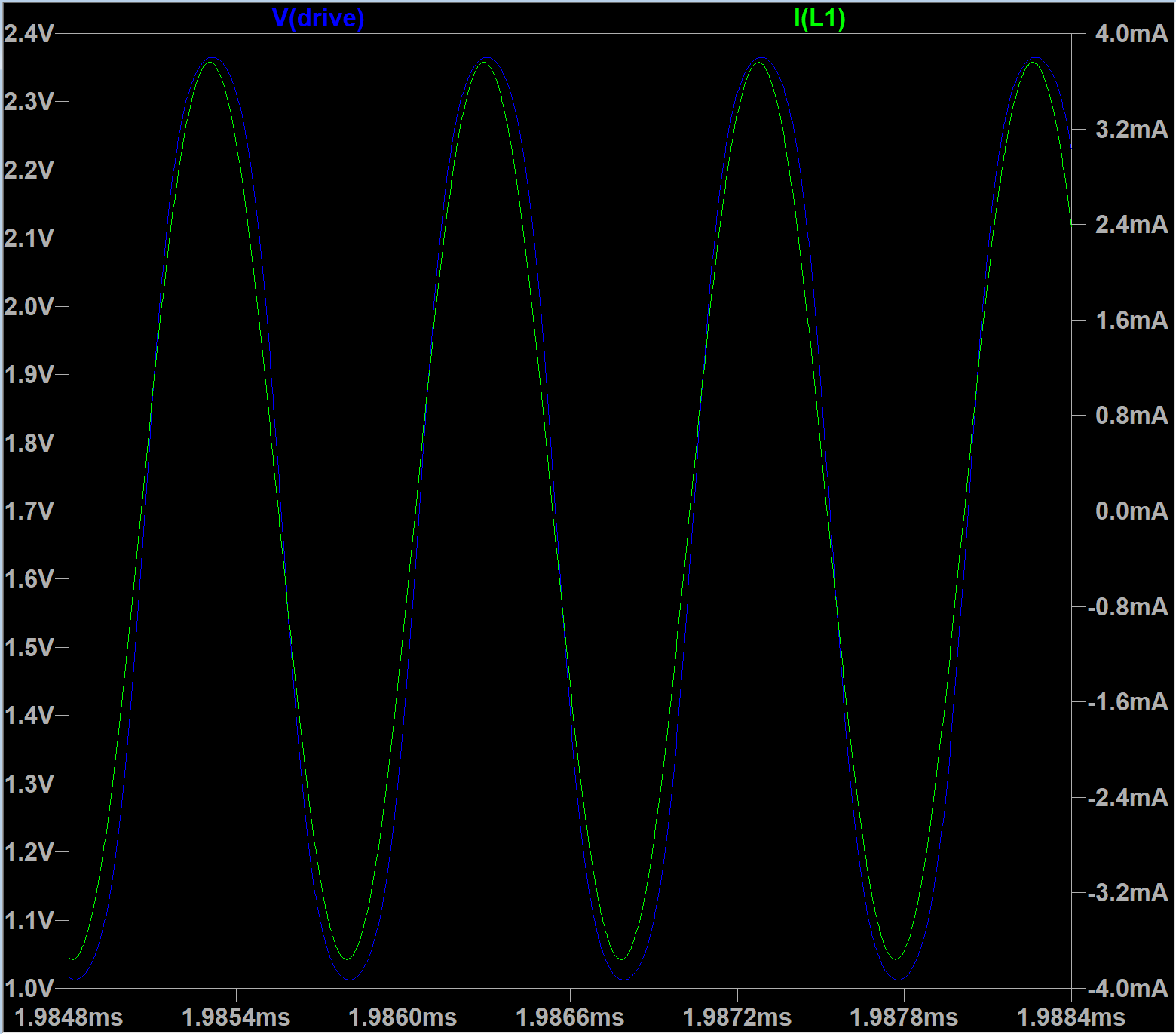

Steps:

1) go to the starting point, 1ms

2) find the nearest rising-edge crossing of 1.65V: 1.000667683ms

3) add half of the noise period = 5ms

4) display the current waveform for a 2us window starting at the resulting point (in our case 6.000667683ms to 6.002667683ms, with the voltage axis set to 0.8..2.5V)

5) take a screenshot of the waveform and paste it as a new layer in GIMP

6) repeat steps 1..5 for other starting points, in 1ms steps

After adding a new layer, align it with the layer below using the plot frame.
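Steps 1..4 could also be done on exported waveform data instead of by hand. Below is a minimal Python sketch, assuming the waveform is available as two lists of sampled times and voltages. The function names `rising_crossing` and `capture_window` are made up for illustration, and the code looks for the first rising crossing at or after the starting point rather than the nearest one in either direction.

```python
def rising_crossing(times, volts, start, level=1.65):
    """Return the interpolated time of the first rising crossing of
    `level` that lies at or after `start`, or None if there is none."""
    for i in range(1, len(times)):
        if times[i - 1] < start:
            continue  # segment begins before the starting point
        v0, v1 = volts[i - 1], volts[i]
        if v0 < level <= v1:
            t0, t1 = times[i - 1], times[i]
            # linear interpolation between the two samples
            return t0 + (level - v0) * (t1 - t0) / (v1 - v0)
    return None


def capture_window(times, volts, start, delay=5e-3, span=2e-6, level=1.65):
    """Return (begin, end) of the display window: trigger point plus
    `delay`, lasting `span`. Returns None if no trigger is found."""
    t_trig = rising_crossing(times, volts, start, level)
    if t_trig is None:
        return None
    return (t_trig + delay, t_trig + delay + span)
```

Calling `capture_window` with `start` set to 1ms, 2ms, ... 5ms (in seconds) would give one window per screenshot, with the default `delay` and `span` matching the 5ms and 2us used above.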

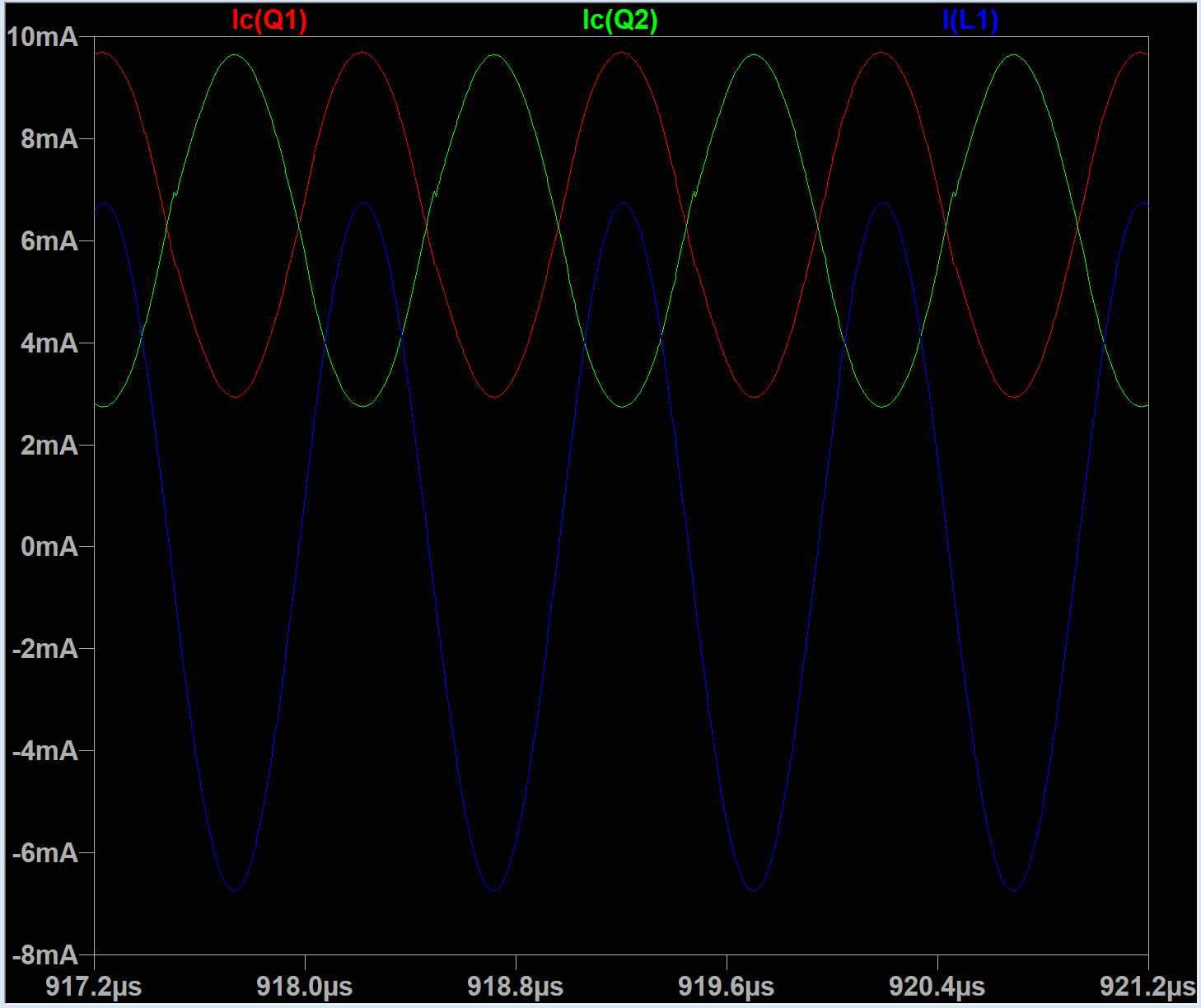

As a result, I've taken waveforms for 5 time intervals:

6.000667683ms to 6.002667683ms

7.000366957ms to 7.002366957ms

8.000066231ms to 8.002066231ms

9.000858077ms to 9.002858077ms

10.000557358ms to 10.002557358ms

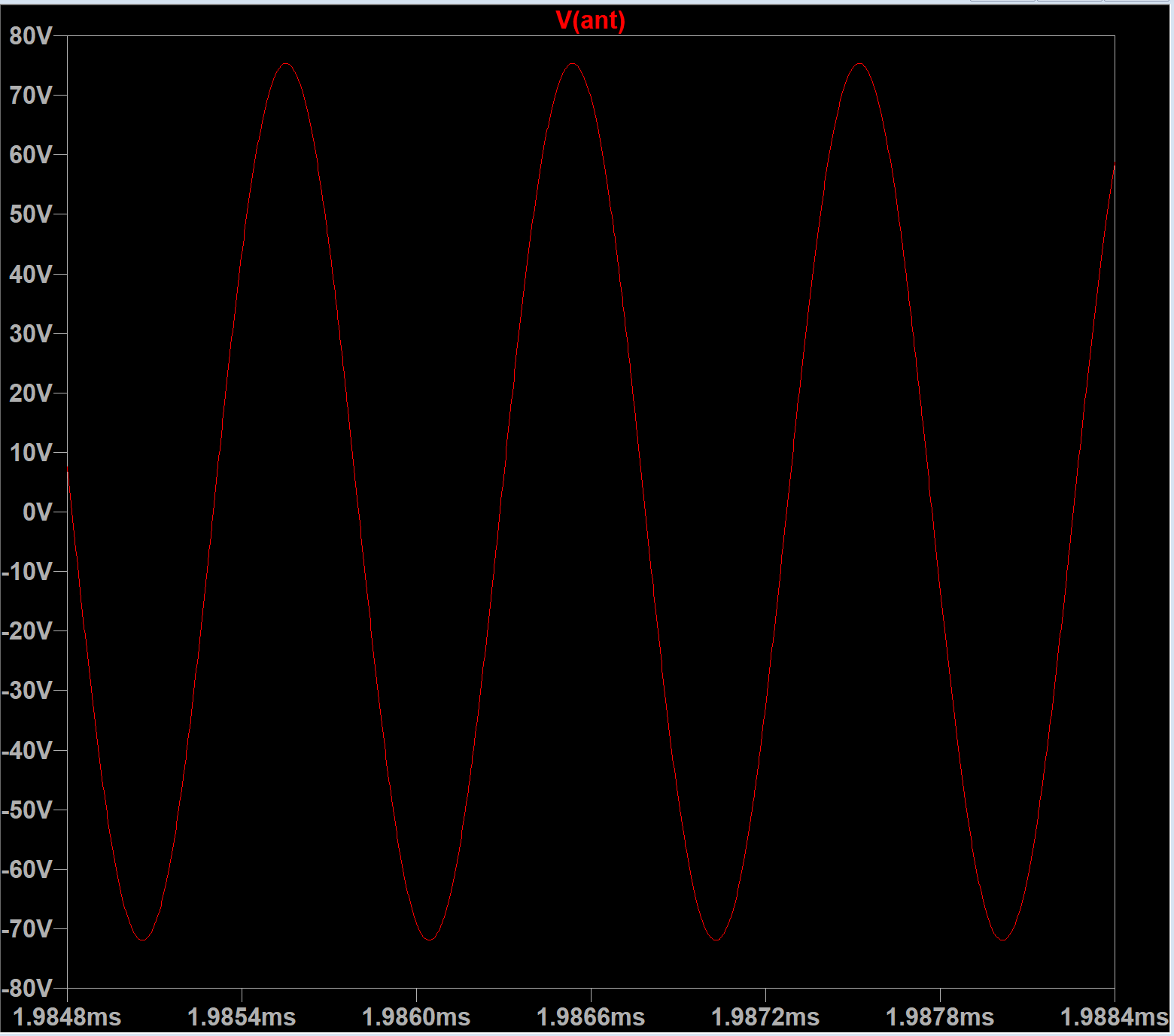

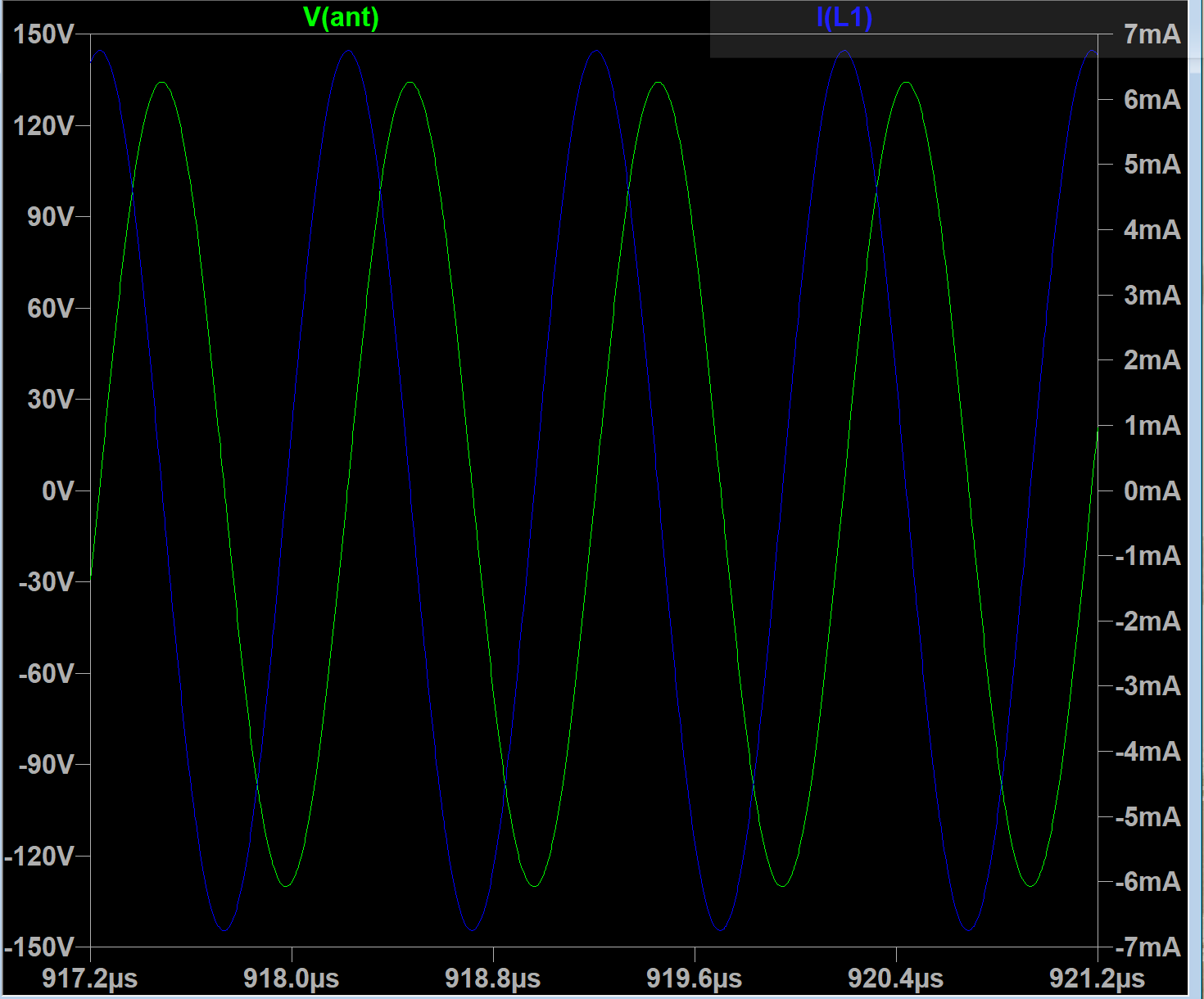

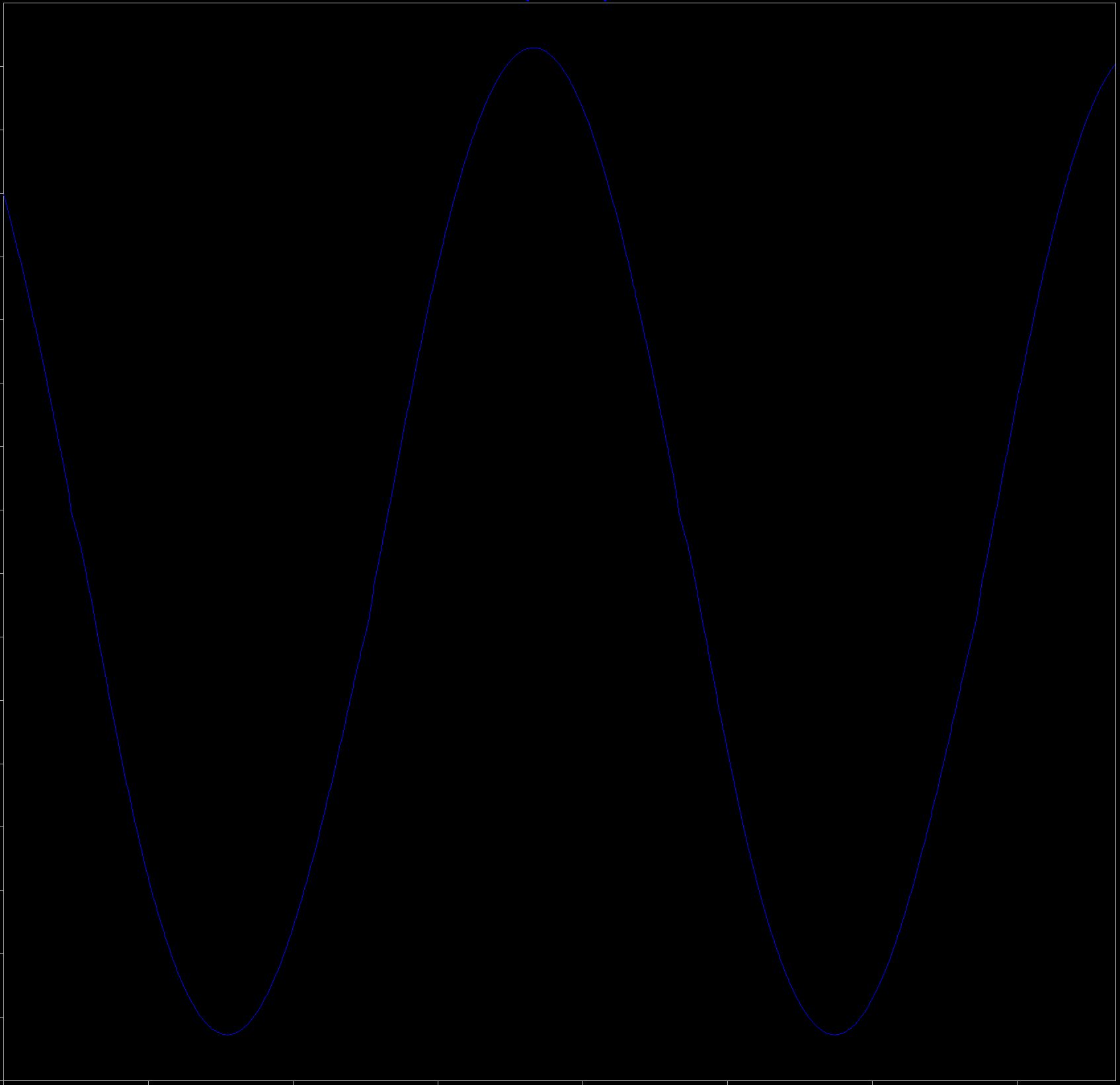

Resulting image for single trace (at 6.000667683ms)

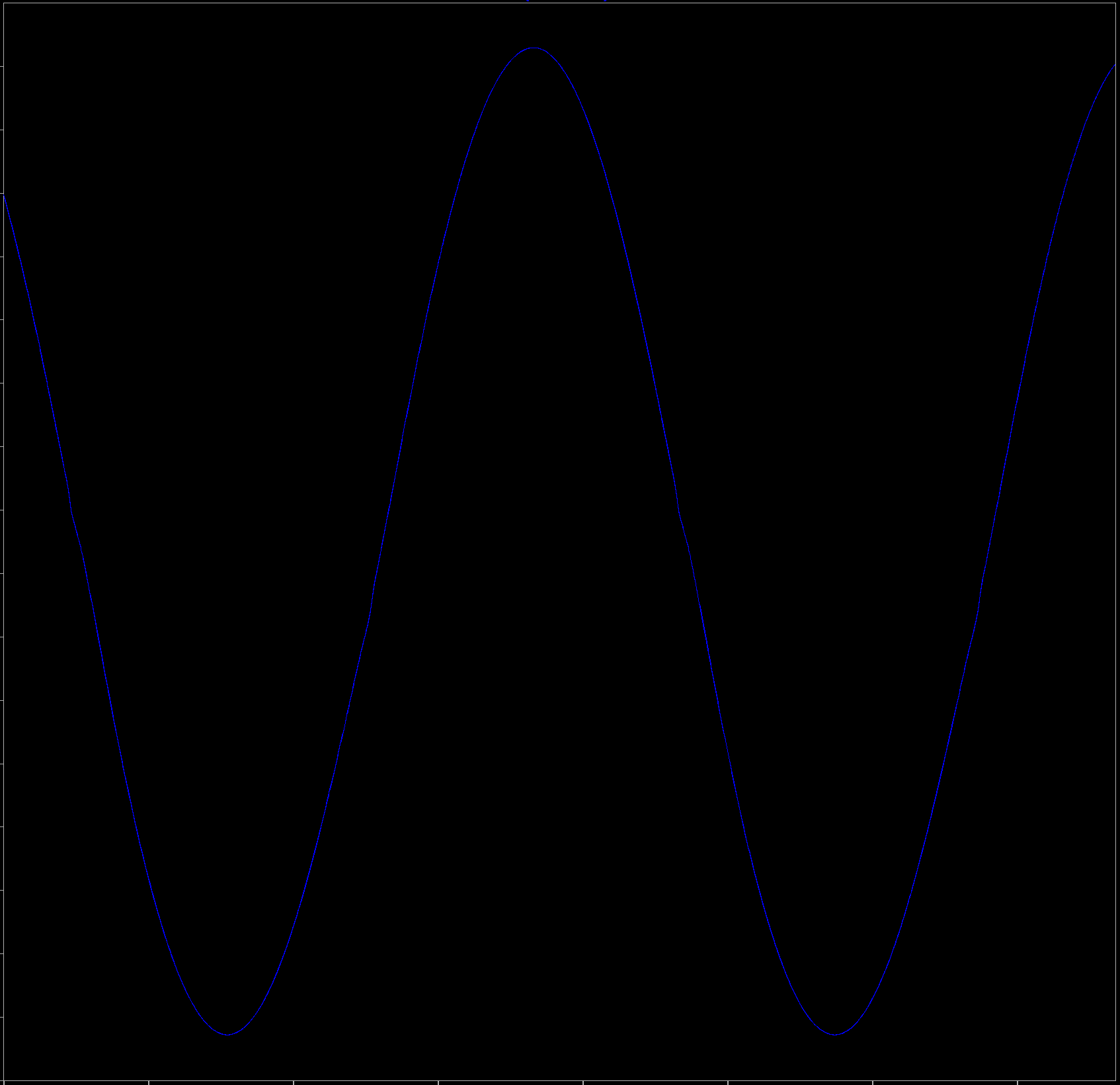

5 layers combined with "lighten" mode
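For reference, a "lighten" style blend keeps the brighter value at each pixel, so bright traces on a dark background accumulate the way they would on a scope with persistence. A minimal Python sketch of that idea, assuming each layer is a grayscale image stored as a list of rows of equal size (the function name `lighten` is hypothetical, and this is not GIMP's actual code):

```python
def lighten(layers):
    """Combine equally sized grayscale images by taking the
    per-pixel maximum across all layers."""
    height = len(layers[0])
    width = len(layers[0][0])
    return [
        [max(layer[y][x] for layer in layers) for x in range(width)]
        for y in range(height)
    ]
```

If the traces from different trigger points were shifted by noise, the combined image would show a thickened or doubled trace; identical traces collapse into one.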

I don't see any noise. It looks like mains hum is not a changing "world" potential.

What next? Any ideas? I can try replacing VCC with a 60Hz sine, but I'm not sure it would help to reproduce the mains hum issue.

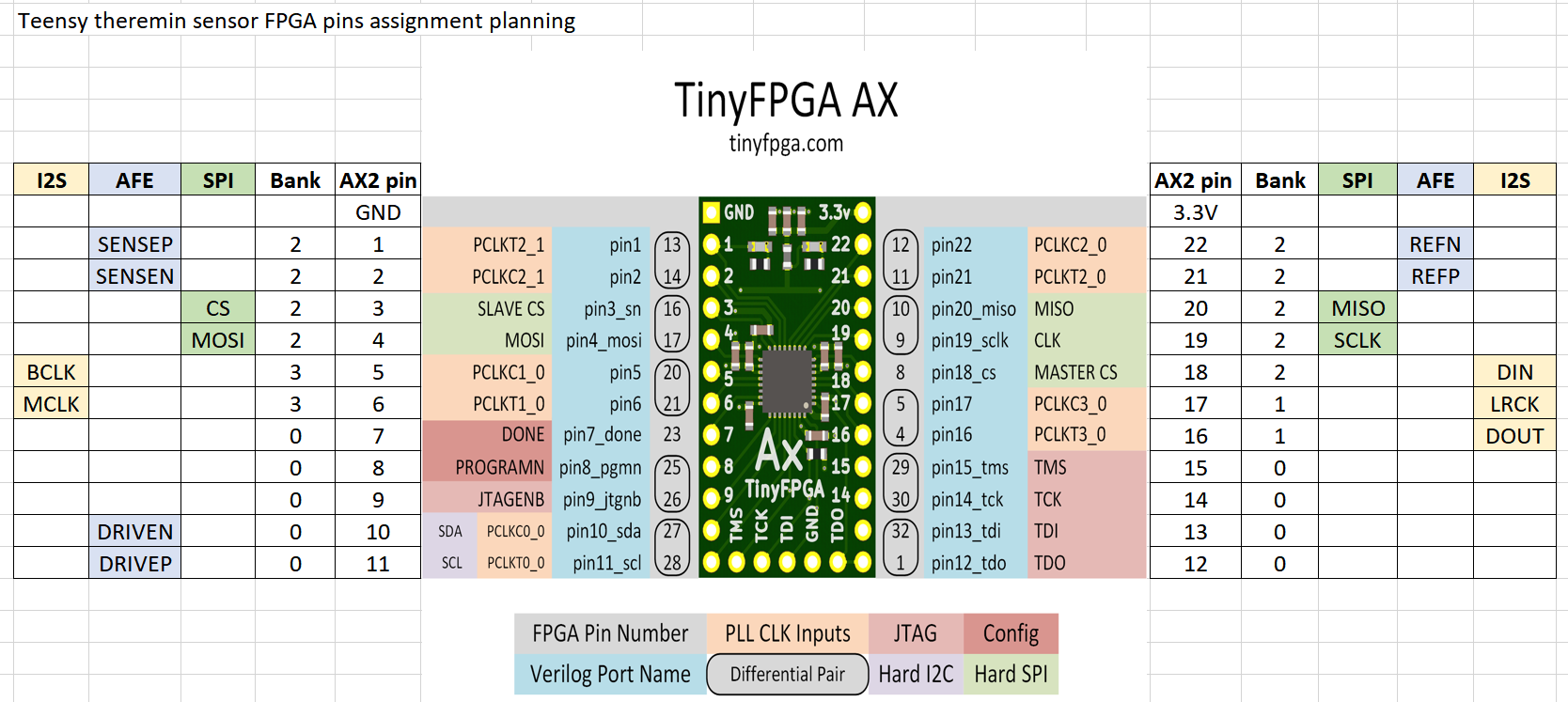

I think it would need to be a bi-directional serial interface, like SPI, so you can set things like dither strength. For portability (and because I'm a control freak) I prefer to write my own interfaces in SV rather than use hard coded logic blocks. Then you can do anything as long as both ends agree on the protocol.

With an FPGA as the main device, we can use any protocol we can imagine.

For an MCU, a standard one like SPI, I2C, or I2S is preferred, since it has hardware support and would not consume a lot of CPU resources.

Moving functionality off of the main FPGA would require some thought as to timing and synchronization. When you've got a single clock inside a single FPGA everything can be fairly straightforward (if you have enough internal PLLs).

The sensor-to-main-board interface has two parts: configuration and realtime axis data.

Configuration includes FPGA configuration (bitstream) programming, and sensor parameters set during calibration - like the min/max frequency range for the PLL, scaling / linearizing parameters, etc.

Configuration parameters are rarely changed, so a slow interface would work ok.

The realtime interface should be fast enough, and consume as little CPU as possible.

For each audio frame (e.g. once per 600 samples) we should poll axis values from two sensors.

SPI or I2C is probably ok, but I'm looking at an I2S stream.

The I2S audio input is mostly useless in a theremin. We can stream axis values, one per sample, and they would be read by the MCU using DMA.

I saw an example of a MachXO2 configuration update via I2C, so it might work for calibration params as well.

Not sure if two XO2s can share the same I2C interface. Is it possible to assign an address?

With a relatively low-rate I2S bit clock (like 768kHz) it's easy to resolve all synchronization issues.

It makes sense to clock the XO2 FPGA from an external clock - e.g. from the MCLK (~12.288MHz) signal of I2S - generated by the MCU audio interface.
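To see how these clocks relate, here is a small Python sketch of the arithmetic. The 12.288MHz MCLK and 768kHz bit clock are the figures from above; the 48kHz sample rate is an assumption (it is not stated here), and the function name `clock_ratios` is made up for illustration.

```python
MCLK_HZ = 12_288_000     # I2S master clock, from the post
BCLK_HZ = 768_000        # I2S bit clock, from the post
SAMPLE_RATE_HZ = 48_000  # ASSUMPTION: sample rate is not given in the post


def clock_ratios(mclk_hz, bclk_hz, fs_hz):
    """Return the integer ratios between MCLK, bit clock and frame rate."""
    return {
        "mclk_per_bclk": mclk_hz // bclk_hz,    # divider from MCLK to BCLK
        "mclk_per_frame": mclk_hz // fs_hz,     # MCLK cycles per sample frame
        "bits_per_frame": bclk_hz // fs_hz,     # bit slots per sample frame
    }


ratios = clock_ratios(MCLK_HZ, BCLK_HZ, SAMPLE_RATE_HZ)
```

Under the 48kHz assumption this gives an MCLK-to-BCLK divider of 16 and 16 bit slots per frame. The number of bits available per axis value therefore depends on the actual frame rate and on how many sensors share a frame.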

The sysCLOCK PLL of the XO2 would generate all necessary internal clocks.

I'm going to design the sensor with LVDS interfacing between the DPLL FPGA and the analog front end - in the hope that it can reduce noise.

We can also use separate regulators - for the FPGA, the AFE, and even the drive and sensing parts of the AFE.

Vadim, the world seems to be crying out for a solid, full-featured, inexpensive Theremin, which you and I know is clearly doable with current technology. I think FPGA-based LC DPLLs coupled to a cheap multi-core processor, with a touchscreen rather than encoders, might be the best way to go. I hate to lash anything to the shifting sands of consumer computational solutions, but a cheap 7" tablet running an app comes to mind (otherwise one is re-inventing the wheel to some degree - though often that wheel could use some re-inventing, if only for increased control). I'd really like to contribute to such a project, I'd certainly be up for porting all of my D-Lev code to it.

It would be great if you joined this project. You already contribute a lot through the oscillator and sensor discussions.

Now I like the idea of a Teensy-based theremin - with tiny FPGAs for the sensors. Using FPGA DPLL sensors can eliminate the need for a main FPGA device.

An iMXRT ARM core at 1GHz with ~1.5 instructions per cycle, using tightly coupled memory (2 ports - instruction + data in the same cycle, or reading two instructions w/o cache delays), exceeds the D-Lev synthesizer's power and leaves a lot of room for experiments.
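As a rough feel for that headroom, the per-sample instruction budget can be estimated in a few lines of Python. The 1GHz clock and ~1.5 instructions per cycle are the figures above; the 48kHz audio sample rate is an assumption, and `instructions_per_sample` is a hypothetical helper name.

```python
def instructions_per_sample(core_hz, ipc, sample_rate_hz):
    """Estimate how many instructions can run per audio sample."""
    return core_hz * ipc / sample_rate_hz


# ASSUMPTION: 48 kHz audio sample rate (not stated in the post)
budget = instructions_per_sample(1e9, 1.5, 48_000)
```

With these numbers the budget comes to roughly 31 thousand instructions per sample, before subtracting interrupt, DMA and GUI overhead.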

I believe the D-Lev synthesizer could easily be ported from HIVE ASM to C. Some of the presets, like the human voices, are really awesome.

GUI/controls - not a problem when programming in C/C++.

Teensy can execute one floating point instruction per cycle (with 4-byte float). Can we benefit from FP in some DSP algorithms?

LCD touch is pretty useful IMHO. A typical tablet most likely has capacitive touch and will suffer from the 500Vpp antennas nearby.

Connecting an LCD with built-in resistive touch and SPI display interface controllers seems ok to me.

The 2.8 or 3.2inch screens offered for Teensy are probably good.

Anyway, I'm planning to have several encoders and pots, just in case - sometimes hardware controls are better than a touch interface.