Rhythm Method

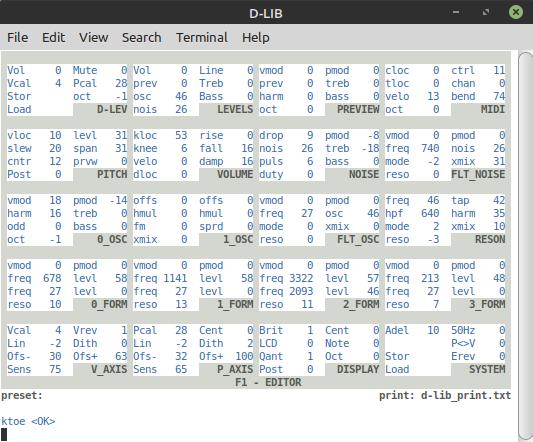

I've got an action plan, and not too surprisingly it boils down to more or less a pared-down version of the Open.Theremin approach - so very nice that their code is open source!

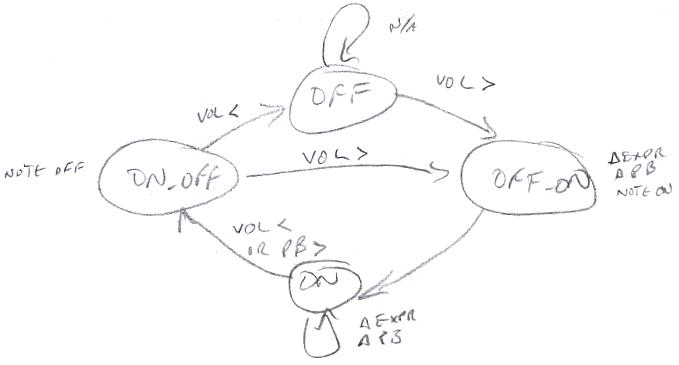

It's sort of a 4-state state machine, though only 2 of the states - note on (ON) and note off (OFF) - can be the entry point at the start of an IRQ; the other two (OFF_ON and ON_OFF) are transitional and are passed through within a single IRQ.

Starting at OFF we wait until the volume axis exceeds some threshold and then we transition to the OFF_ON state.

In the OFF_ON state we calculate the EXPR expression pedal and PB pitch bend values, transmit them if they have changed, transmit a MIDI note on, and then transition to the ON state - and then are done with a single IRQ's work.

Entering the ON state on the next IRQ, we again calculate EXPR and PB and transmit them if there is any change. If the volume axis drops below the threshold we transition to the ON_OFF state, whereupon a MIDI note off is transmitted, and then to the OFF state, whereupon we are done with the IRQ.

A second trigger for the ON => ON_OFF state transition is when the pitch bend distance exceeds some fixed amount agreed upon between MIDI TX and RX (manual settings), such as +/-12 notes. In that case we do ON => ON_OFF => OFF_ON => ON, which turns off the note, sends new EXPR & PB data, and starts a new note, finishing the IRQ.

This entire state transition transmits 15 bytes (I think we want to transmit 14 bit EXPR data rather than 7 bit data, and we can use MIDI "running status" to shave off the second status byte here), which takes 15 * 320us = 4.8ms, for a maximum rate of about 208Hz. Here's the breakdown:

note-off = 3 bytes

EXPR14 = 5 bytes

PB(14) = 4 bytes

note-on = 3 bytes
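The state machine and byte budget above can be sketched roughly as follows. This is only a sketch under assumptions of mine, not the actual firmware: the names `NoteMachine`, `irq`, `vol_threshold` and `pb_limit` are hypothetical, EXPR is assumed to track the volume axis directly, the new note is assumed to be the pitch rounded to the nearest note, and in the ON state the transitions are checked before any EXPR / PB data is sent (so the retrigger path sends each only once). The byte costs are copied as-is from the breakdown above.

```python
from enum import Enum, auto


class State(Enum):
    OFF = auto()
    OFF_ON = auto()   # transitional
    ON = auto()
    ON_OFF = auto()   # transitional


# Byte costs taken from the breakdown above; 320us per byte as used in the post.
BYTES = {"note_off": 3, "expr14": 5, "pb14": 4, "note_on": 3}
BYTE_TIME_US = 320


class NoteMachine:
    def __init__(self, vol_threshold, pb_limit):
        self.state = State.OFF
        self.vol_threshold = vol_threshold
        self.pb_limit = pb_limit      # max bend distance in notes, e.g. 12
        self.note = None
        self.last_expr = None
        self.last_pb = None

    def _send_controls(self, expr, pb, sent):
        # Transmit EXPR and PB only when they have changed.
        if expr != self.last_expr:
            sent.append("expr14")
            self.last_expr = expr
        if pb != self.last_pb:
            sent.append("pb14")
            self.last_pb = pb

    def irq(self, volume, pitch):
        """One IRQ's worth of work; returns the messages transmitted."""
        sent = []
        retrigger = False
        expr = volume  # assumption: EXPR follows the volume axis

        # Only OFF and ON can be the state at IRQ entry.
        if self.state is State.OFF:
            if volume > self.vol_threshold:
                self.state = State.OFF_ON
        elif self.state is State.ON:
            if volume < self.vol_threshold:
                self.state = State.ON_OFF
            elif abs(pitch - self.note) > self.pb_limit:
                self.state = State.ON_OFF
                retrigger = True
            else:
                self._send_controls(expr, pitch - self.note, sent)

        if self.state is State.ON_OFF:
            sent.append("note_off")
            self.state = State.OFF_ON if retrigger else State.OFF

        if self.state is State.OFF_ON:
            self.note = round(pitch)
            self._send_controls(expr, pitch - self.note, sent)
            sent.append("note_on")
            self.state = State.ON

        return sent


def cost_bytes(sent):
    return sum(BYTES[m] for m in sent)


def cost_us(sent):
    return cost_bytes(sent) * BYTE_TIME_US
```

Feeding this a pitch that moves more than `pb_limit` away from the sounding note (with EXPR and PB both changing) produces the full note-off, EXPR, PB, note-on clump, which `cost_bytes` totals to the 15 bytes and `cost_us` to the 4.8ms worked out above.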

So I'm wondering aloud what - if anything - the receive side might be doing to smooth out this rather slow / sparse data stream? Software folks who write synth code probably know enough about DSP to recognize this as a sampling scenario and treat it as such, but what about all the OS code in between "managing" the MIDI stream? I don't know if I should meter/time the spaces between data bytes, between MIDI commands, between clumps of MIDI commands, or globally. Or if I should meter it at all? If it were me on the other side I think I'd want related clumps coming in at a fixed rate, and always coming in regardless of change, just to dodge a fractional rate resampling type scenario. But that would generate a lot of MIDI traffic and wouldn't update the parameters as fast as possible. It's kind of crazy that we have to worry about MIDI bandwidth at all.

Not addressed here is MIDI note-on velocity, which doesn't make a lot of sense for wind or Theremin type controllers, as the new note is selected a significant time before it is played. I suppose I'll have to come up with something, but it's an ill-fitting parameter in this scenario, and I'm anticipating trouble. MIDI is massively keyboard-centric; even the breath controller parameter, which I believe was included for the DX7, is generally ignored by synths, adding to the ill-fitting guessing game. EXPR is an expression pedal input, and the spec says it's for individual note volume, but it seems to be used (here and there!) to control timbre-type dynamics too. Ah well, gotta start somewhere.

[EDIT] Should have mentioned pitch bend, which is 14 bits of data. If we set the PB range to +/-1 octave this spans 24 notes, and 2^14 / 24 = 682. So there are about 682 PB steps per note here, while the ear is at most sensitive to about 3 cents, which only needs 100 / 3 = 33 steps per note. It seems we could easily do +/-2 octaves and above here and largely avoid weird note retriggering at the PB limits. A lot of it depends on how many bits are actually utilized (not truncated or ignored) on the RX end and, again, how the data is filtered in time.
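To make the resolution arithmetic concrete, here is a small sketch. The function names are mine (hypothetical); the only things taken as given are that pitch bend is a 14-bit value centered at 8192 and is sent as two 7-bit data bytes, LSB first.

```python
PB_STEPS = 1 << 14    # 14-bit pitch bend: 16384 values
PB_CENTER = 8192      # no-bend position


def steps_per_note(bend_range_notes):
    """PB steps available per note for a +/- bend_range_notes setting."""
    return PB_STEPS / (2 * bend_range_notes)


def cents_per_step(bend_range_notes):
    """Size of one PB step in cents (100 cents per note)."""
    return 100.0 / steps_per_note(bend_range_notes)


def encode_pitch_bend(offset_notes, bend_range_notes):
    """Map an offset in notes to the (lsb, msb) data bytes of a PB message."""
    value = PB_CENTER + round(offset_notes / bend_range_notes * PB_CENTER)
    value = max(0, min(PB_STEPS - 1, value))  # clamp to the 14-bit range
    return value & 0x7F, value >> 7


if __name__ == "__main__":
    for octaves in (1, 2, 4):
        r = 12 * octaves
        print(f"+/-{octaves} oct: {steps_per_note(r):.1f} steps/note, "
              f"{cents_per_step(r):.3f} cents/step")
```

Even at +/-2 octaves this leaves roughly 341 steps per note, about ten times the 33 needed for 3 cents, assuming the receiver really uses all 14 bits.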